Overview

Prosimo’s multi-cloud networking suite provides full L3 to L7 connectivity. To better meet the performance and security requirements that come with the complexity of multi-cloud networking, we have decentralized policy enforcement to the network element nearest to the source. This lets us apply security policies early, which enhances security, improves performance, and keeps costs down.

In this article, we introduce the Prosimo policy enforcer and explain how we leverage eBPF to push the policy enforcement point as early as possible to achieve these performance and security goals.

Prosimo Policy Enforcer and Edge

Let’s first introduce two important network elements in Prosimo’s multi-cloud networking suite: edge and policy enforcer. An edge is a computing cluster in the Prosimo fabric. It consists of a myriad of functions to serve both networks and users. The policy enforcer is a network element that enforces policy and connects your source network to the nearest Prosimo edge.

It works much like travel in the real world. Airports in different geographical regions form a complex network of routes that can take you anywhere in the world. But to get to the nearest airport, you need another form of transportation. You can either call an Uber to take you directly from your doorstep to the airport terminal, or take public transportation. So, to travel to a different continent, you first hop in an Uber that takes you to the airport near your city, then board the plane and fly to your destination.

That is basically how the Prosimo fabric works. The Prosimo Edge is like the airport, while the Prosimo Policy Enforcer acts as your choice of transportation to the airport. The set of Prosimo Edges forms a complex network of routes that safely and efficiently sends your traffic from one cloud location to another. The Prosimo Policy Enforcer, on the other hand, is located close to your source network, and its main purpose is to police, encrypt, and forward the accepted traffic to the nearest edge.

Enforcer Placement

There are two ways the policy enforcer can be placed in the Prosimo fabric: in the workload VPC, or in the infrastructure VPC.

When the enforcer is in the infrastructure VPC, it is placed together with the edge cluster and can serve multiple VPCs. When it is placed in the workload VPC, it resides directly inside the source network’s VPC and therefore serves only that particular VPC.

Just as there are multiple forms of transportation from your home to the airport, the placement options give you flexibility in how your source network connects to the fabric.

Let’s now look at how they are different.

Infra VPC

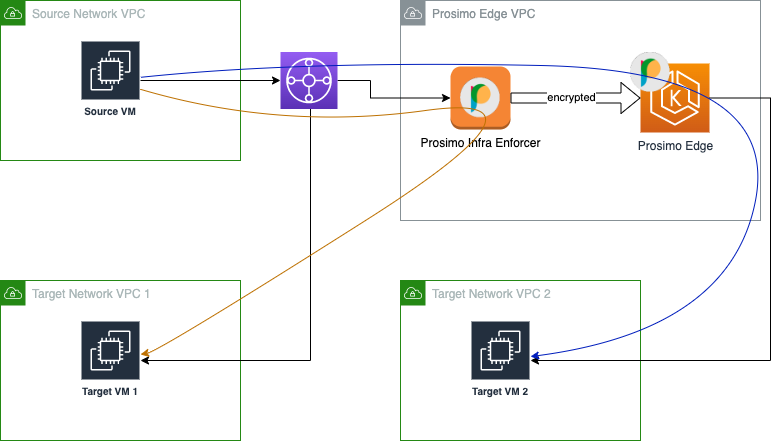

Placing the enforcer in the infra VPC means that the enforcer is inside the same VPC as the Prosimo Edge of the same cloud and region. There are two general scenarios for how a connection can flow, as shown in the diagram:

- The source and destination networks are in different clouds or different regions.

This is shown by the blue line. The connection reaches the enforcer, which forwards it to the edge cluster before it reaches the target network.

- The source and destination networks are in the same cloud and region.

This is shown by the orange curved line, also known as hairpin mode. In this case, the connection bounces off the enforcer, after policy enforcement, and goes right back towards the target network.
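The two scenarios above boil down to one comparison of cloud and region. The following is a minimal Python sketch of that decision, not Prosimo's implementation; the `Location` type, the `next_hop` function, and the returned labels are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Location:
    """Hypothetical description of where a network lives."""
    cloud: str
    region: str


def next_hop(source: Location, destination: Location) -> str:
    """Return where an infra-VPC enforcer sends an accepted connection."""
    if source == destination:
        # Same cloud and region: hairpin straight back to the target network.
        return "target-network"
    # Different cloud or different region: hand off to the edge cluster.
    return "edge-cluster"
```

For example, a connection between two networks in the same cloud and region yields `"target-network"` (hairpin mode), while any other combination yields `"edge-cluster"`.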

Fig 1: MCN IP only overlays that require appliances in every VPC

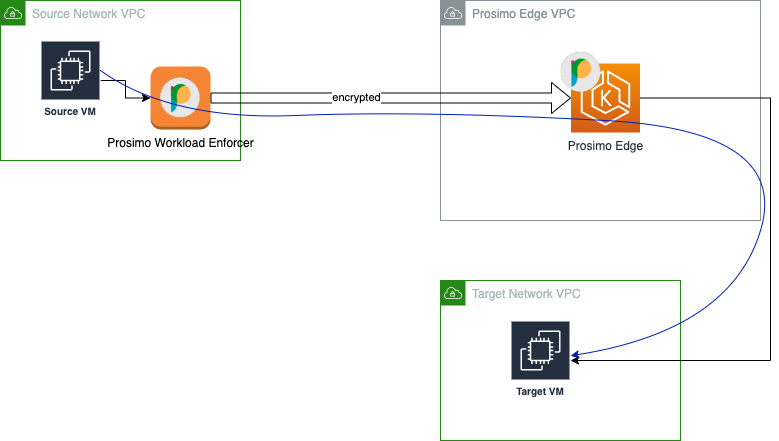

Workload VPC

When a policy enforcer is in the “workload VPC”, it is placed in the VPC of the source VM. In this case, traffic is redirected towards the enforcer via a VPC route, and a connection is always forwarded to the edge cluster before reaching the destination, as shown in the following diagram.

DC Connector

A third form of placement is an enforcer in the data center. This is similar to the workload VPC placement model: the enforcer is placed directly in the data center network, and the tunnel is formed either via the Internet or through a private link of your choice. The network policy discussion in this article applies equally in the data center.

Network Policy

Let’s now talk about the network policy.

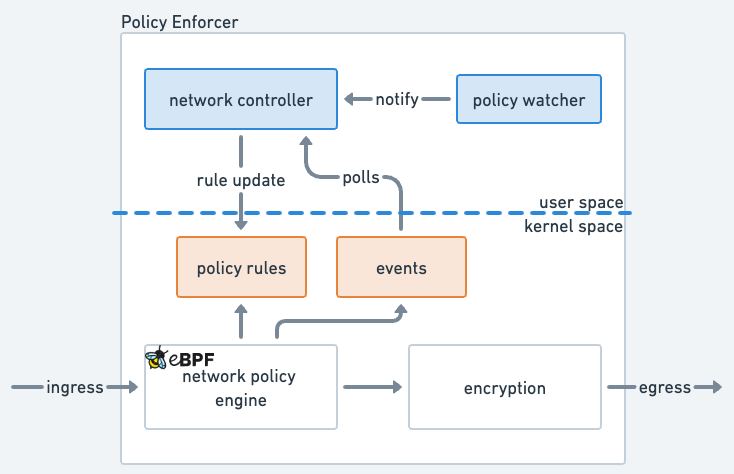

When you install a policy into the Prosimo fabric, it is split into sections, and each section is installed into its respective component. The network part, i.e. protocol, IP addresses, and ports, is considered the network policy and is installed into the policy enforcer nearest to the source. The policy is rendered by the network controller and installed into the eBPF-based network policy engine, where incoming traffic is inspected and either allowed or denied.

As shown in this diagram, the policy enforcer consists of multiple components for the purpose of enforcing network policy.
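To make the network part of a policy concrete, here is a minimal Python sketch of a rule built from a protocol, an IP range, and a port range, plus a lookup that falls back to deny. The `Rule` fields, the `evaluate` function, and the first-match-wins ordering are assumptions made for illustration; the article does not describe Prosimo's actual rule format or matching order.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical network policy rule: protocol, destination range, ports."""
    protocol: str      # e.g. "tcp" or "udp"
    dest_cidr: str     # e.g. "10.0.0.0/16"
    port_low: int
    port_high: int
    action: str        # "allow" or "deny"

    def matches(self, protocol: str, dest_ip: str, dest_port: int) -> bool:
        return (
            protocol == self.protocol
            and ipaddress.ip_address(dest_ip) in ipaddress.ip_network(self.dest_cidr)
            and self.port_low <= dest_port <= self.port_high
        )


def evaluate(rules, protocol, dest_ip, dest_port, default="deny"):
    """Return the action of the first matching rule (assumed ordering)."""
    for rule in rules:
        if rule.matches(protocol, dest_ip, dest_port):
            return rule.action
    # Nothing matched: reject, mirroring a deny-by-default posture.
    return default
```

With a single rule `Rule("tcp", "10.0.0.0/16", 443, 443, "allow")`, HTTPS traffic to `10.0.1.5` is allowed, while SSH to the same address falls through to the default and is denied.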

Network Controller

The network controller is responsible for loading the policy engine into the kernel, monitoring and installing policy rules, and emitting security audit logs. The policy engine is loaded as soon as the policy enforcer boots up, with default rules that reject all traffic for security reasons. The network controller then runs in a passive mode, waiting for notifications from the policy watcher. The policy watcher receives the new policy definition from the user through our messaging channel, transforms it into an internal policy language format, and notifies the network controller. At this point, the network controller installs the rules and notifies the engine once all of them have been installed successfully. The network controller also polls security events from the policy engine, which are emitted through the Prosimo analytics pipeline for audit and analysis purposes.
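The lifecycle described above can be modeled in a few lines of Python. This is a user-space sketch under stated assumptions, not the real controller: `PolicyEngineStub`, `NetworkController`, `on_policy_notification`, `poll_events`, and the `generation` counter used to stand in for "notifying the engine" are all hypothetical names.

```python
from collections import deque


class PolicyEngineStub:
    """Hypothetical stand-in for the in-kernel policy engine."""

    def __init__(self):
        self.rules = []                 # no rules yet, so the default applies
        self.default_action = "deny"    # reject all traffic at boot
        self.generation = 0             # bumped when a full rule set is in place
        self.events = deque()           # security events waiting to be polled


class NetworkController:
    def __init__(self):
        # Boot: load the engine immediately, with reject-all defaults.
        self.engine = PolicyEngineStub()
        self.audit_log = []

    def on_policy_notification(self, rules):
        """Called by the policy watcher once it has translated a new policy."""
        self.engine.rules = list(rules)   # install every rule first
        self.engine.generation += 1       # then signal that installation is done

    def poll_events(self):
        """Drain pending security events and hand them to the audit log."""
        drained = []
        while self.engine.events:
            drained.append(self.engine.events.popleft())
        self.audit_log.extend(drained)
        return drained
```

The point of the ordering in `on_policy_notification` is that the engine is only told about a new policy after every rule is in place, so it never acts on a half-installed rule set.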

eBPF Policy Engine

The policy engine runs continuously in the kernel as an eBPF program attached to the ingress network interface. Running the policy engine in eBPF allows all traffic to be inspected by the engine before it is passed to the Linux networking stack for further processing. We have covered the advantages of using eBPF in an earlier blog post; here, let’s just reiterate the ones that matter for network policy:

- High Performance

eBPF runs early in the packet processing pipeline. This means that the kernel does not waste CPU power on packets that will be discarded.

- Safe Execution

The eBPF verifier makes sure that the program is safe to run before it can be loaded into the kernel. With a kernel module or a DPDK-based packet processing framework, programming errors can easily bring down the entire system; the eBPF-based policy engine, by contrast, very rarely causes a system reboot or deadlock. This is especially important when deploying solutions in the cloud, where kernel-level debugging is painfully difficult or even impossible.

- Cloud Native Approach

The only requirement for running eBPF is that the system runs Linux with a minimum required kernel version. This allows us to use the same technology regardless of which form factor the connector runs on. These form factors include cloud provider VMs, bare-metal devices, and Kubernetes clusters.

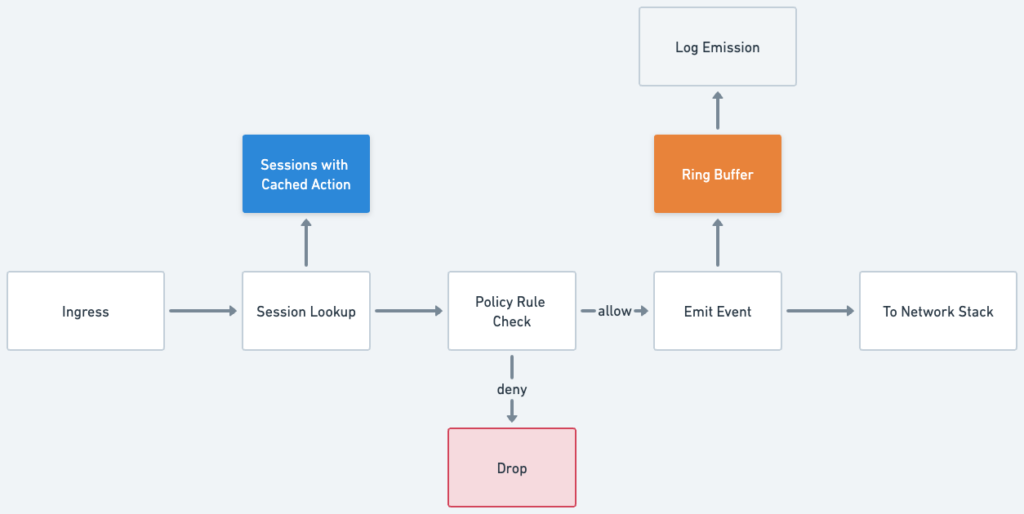

The policy engine extracts information from each incoming packet and looks it up in the session cache. If a cached action is found, the policy rules are bypassed and the packet proceeds based on the cached action, either allow or deny. If this is a new session, the engine runs through the policy rules to find the proper action. After that, the session generates an event, which is sent to a BPF ring buffer and retrieved by the log emission user-space program. The diagram shows the policy execution pipeline.

The connection, after being accepted by the policy engine, moves on to the Linux network stack, where it is encrypted and routed towards the destination based on the optimized routing rules built into the Prosimo fabric. If the session is rejected, a security audit log is generated, and traffic is stopped before it hits the network stack.
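The pipeline above (session cache first, rule lookup only for new sessions, one event per new session) can be sketched as a small user-space model in Python. The real engine is an eBPF program in the kernel; everything here, including the `PolicyEngineModel` class, the 5-tuple session key, the `(protocol, dest_port, action)` rule shape, and the `deque` standing in for the BPF ring buffer, is an assumption made for illustration.

```python
from collections import deque


class PolicyEngineModel:
    """User-space model of the cache-then-rules pipeline (illustrative only)."""

    def __init__(self, rules, default_action="deny"):
        self.rules = rules                  # list of (protocol, dest_port, action)
        self.default_action = default_action
        self.session_cache = {}             # session key -> cached action
        self.events = deque()               # stands in for the BPF ring buffer
        self.rule_lookups = 0               # counts slow-path evaluations

    def process(self, proto, src, sport, dst, dport):
        key = (proto, src, sport, dst, dport)
        cached = self.session_cache.get(key)
        if cached is not None:
            return cached                   # fast path: skip the policy rules

        # New session: walk the rules and fall back to the default action.
        self.rule_lookups += 1
        action = self.default_action
        for rule_proto, rule_port, rule_action in self.rules:
            if rule_proto == proto and rule_port == dport:
                action = rule_action
                break

        self.session_cache[key] = action
        # One event per new session, to be picked up by the log emitter.
        self.events.append({"session": key, "action": action})
        return action
```

Calling `process` twice with the same 5-tuple evaluates the rules only once; the second call is answered from the cache and produces no additional event.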

Summary

The fundamental reason to run network policy on the policy enforcer using eBPF is to push the enforcement point as early as possible. Take the travel analogy as an example. If a flight is canceled, it makes sense to stop before leaving the house, rather than taking the Uber all the way to the airport only to find out that there is no plane to board. Checking the flight status at home saves time and money. Similarly, by running the network policy at the very first checkpoint in the Prosimo fabric and enforcing it before a packet is processed by the Linux networking stack, we are able to filter unwanted traffic with very little processing overhead. Overall, this approach improves system performance, enhances security, and reduces cloud costs.
