Introduction

In an era where artificial intelligence (AI) and Generative AI are reshaping the technological landscape of the cloud, the demand for scalable, secure, and agile cloud networking solutions has never been more critical. The rise of AI, and more specifically Generative AI workloads with their dynamic connectivity, performance, security, and resource allocation requirements, presents both an opportunity and a challenge for cloud networking.

AI Apps are different.

Gen AI apps and services (e.g., Amazon Bedrock, Vertex AI, GitHub Copilot) have unique characteristics. They need low-latency processing, rapid scaling and provisioning of app endpoints, heavy use of API gateways and serverless functions, and the ability to scale resources dynamically as demand fluctuates. These requirements call for a specialized cloud networking platform that goes beyond the bounds of conventional network architectures.

Prosimo is uniquely positioned in the realm of AI because we built the platform from the ground up on a set of core principles: understanding the application/service layer and its dynamic scaling requirements, using machine learning to make data-driven decisions, and deploying application endpoints ultra-fast with cloud-native networking constructs. These principles enable cloud networking and CCoE teams to build the technical foundations for developer self-service, and they make Prosimo a platform of choice for Gen AI workloads. From virtualized resources and distributed computing to advanced networking features and AI firewalls with built-in Zero Trust profiles, the Prosimo AI Suite for Multi-Cloud Networking provides what is needed to support the unique demands of Gen AI.

Customers are serious about AI

Our customers frequently express interest in enabling their developers to explore the potential of Gen AI and machine learning. Every organization wants to stay ahead of the curve and is looking for ways to empower its developers and application teams to experiment with applications built on recent advances in Gen AI. Gartner echoes this urgency, predicting that by 2026 over 80% of enterprises will have used Generative AI APIs or deployed Generative AI-enabled applications.

This technical brief delves into multi-cloud networking tailored specifically for Gen AI workloads. It explores the connectivity requirements, the security considerations (including guardrails and compliance), developer self-service, and the strategic approaches needed to support Gen AI workloads using Prosimo.

What are the customer requirements for Multi-Cloud Networking?

During our conversations with customers exploring Gen AI, networking architects, platform teams, developers, and key technology partners raised several essential requirements as top priorities: a seamless, consistent connectivity framework across multi-cloud, a self-service experience, and clearly implemented guardrails.

Operational Excellence and Rapid Deployment Framework

Scalability, consistent architecture, and rapid provisioning of resources emerged as fundamental requirements: customers want to build a service connectivity mesh across multi-cloud without worrying about network connectivity dependencies, IP address management, route table propagation, or orchestration of cloud-native constructs (ALB, TGW, PrivateLink, etc.). The service connectivity layer should be decoupled from the underlying network, because AI workloads are highly dynamic and transient, rely heavily on APIs and serverless functions, and change faster than network constructs can be provisioned.

Organizations that have invested heavily in Infrastructure as Code (IaC) require IaC compliance: they expect these environments to be provisioned with established IaC tools such as Terraform.

Secure Framework, Guardrails, and Compliance

Customers raised security and privacy concerns. They want to understand cloud network and application communication patterns so they can see where they are exposed to risk. They also want to implement guardrails that ensure compliance and prevent improper access to specific datasets, or establish an LLM access policy that restricts public or open models lacking clear assurances on data privacy and on the non-public use of their data.
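To make the idea of an LLM access policy more concrete, here is a minimal sketch of how such a rule could be evaluated before a request is forwarded to a model. The `ModelEndpoint` fields, the provider allow-list, and the model IDs other than the Titan example are hypothetical assumptions for illustration, not a Prosimo or AWS schema.

```python
from dataclasses import dataclass

# Hypothetical catalog entry for a model endpoint; field names are illustrative assumptions.
@dataclass(frozen=True)
class ModelEndpoint:
    model_id: str
    provider: str
    private_endpoint: bool   # reachable over a private path (e.g. PrivateLink) rather than the internet
    data_use_assured: bool   # provider gives assurances that customer data is not used publicly

# Hypothetical allow-list chosen by the platform team.
ALLOWED_PROVIDERS = frozenset({"amazon", "anthropic"})

def is_model_allowed(model: ModelEndpoint, allowed_providers=ALLOWED_PROVIDERS):
    """Return (allowed, reason) for a request to the given model."""
    if model.provider not in allowed_providers:
        return False, f"provider {model.provider!r} is not on the allow-list"
    if not model.private_endpoint:
        return False, "model is only reachable over a public endpoint"
    if not model.data_use_assured:
        return False, "no assurance on data privacy and non-public use of data"
    return True, "allowed"

titan = ModelEndpoint("amazon.titan-text-express-v1", "amazon", True, True)
open_model = ModelEndpoint("open-model-x", "community", False, False)  # hypothetical public model
print(is_model_allowed(titan))       # (True, 'allowed')
print(is_model_allowed(open_model))  # (False, "provider 'community' is not on the allow-list")
```

The checks run in a fixed order and stop at the first failure, so the returned reason always names the most basic violated condition.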

Self-Service

Developer self-service was highlighted as the top priority. The goal is a seamless developer experience with no obstacles to innovation, particularly because platform and NetOps teams are already stretched supporting other essential tasks.

Cost Compliance

Cost efficiency is critical, especially with hundreds or thousands of active developer environments for Generative AI. It is vital to provide insight into cloud utilization, minimize the cost of each environment, and use built-in automation to decommission underused infrastructure and conserve resources.
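As an illustration of the "decommission underused infrastructure" requirement, the sketch below flags developer environments that have seen no traffic for a configurable period and ranks them by cost. The `DevEnvironment` record, the 14-day threshold, and the sample data are hypothetical assumptions; a real implementation would pull last-activity and cost data from the platform's telemetry.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

@dataclass
class DevEnvironment:
    name: str
    owner: str
    last_request: datetime   # timestamp of the last request seen for this environment
    monthly_cost_usd: float

def find_idle(envs, now, idle_after=timedelta(days=14)):
    """Return environments with no traffic within `idle_after`, most expensive first."""
    idle = [e for e in envs if now - e.last_request > idle_after]
    return sorted(idle, key=lambda e: e.monthly_cost_usd, reverse=True)

# Hypothetical sample data.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
envs = [
    DevEnvironment("rag-poc", "alice", now - timedelta(days=30), 410.0),
    DevEnvironment("chatbot", "bob", now - timedelta(hours=3), 220.0),
    DevEnvironment("img-gen", "carol", now - timedelta(days=20), 95.0),
]
for env in find_idle(envs, now):
    print(f"decommission candidate: {env.name} ({env.owner}), ${env.monthly_cost_usd:.2f}/month")
```

Sorting by cost puts the biggest savings at the top of the list, which is usually where an automated or approval-based teardown would start.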

Before we map the customer requirements to a deployment blueprint for Amazon Bedrock using Prosimo, let us introduce Bedrock and its architecture. If you are already familiar with how Bedrock works, skip the next section.

What is Amazon Bedrock?

Amazon Bedrock is a managed AWS service that simplifies the consumption of Generative AI by making foundation models from Amazon and third-party providers available through an API. The accounts and infrastructure that host each model are specific to the model provider and are owned and managed by AWS. With Amazon Bedrock, you can build applications that generate text, images, audio, and synthetic data from prompts. What sets Bedrock apart is its access to a range of foundation models from leading AI startups, including AI21, Anthropic, and Stability AI, as well as exclusive access to Amazon's Titan family of foundation models. This variety lets AWS customers choose the models best suited to their specific needs.

Key Advantages of Using Amazon Bedrock via AWS

- Ease of Access: Amazon Bedrock provides an API that opens up a world of foundation models, making it simple to select and implement the model that best fits your project requirements.

- Speed and Efficiency: The service significantly accelerates the development and deployment process of generative AI applications, allowing you to bring your ideas to fruition faster.

- Scalability and Reliability: With AWS’s robust infrastructure, Bedrock ensures that your applications can scale seamlessly while maintaining high reliability.

- Security: Built on AWS’s proven security controls, Bedrock provides a secure environment for your generative AI applications.

- No Infrastructure Management: Bedrock’s managed service model removes the complexity of managing the underlying infrastructure, so you can focus on innovation and development.

Amazon Bedrock Frontend architecture

Integrating Generative AI services, particularly Amazon Bedrock, through the Prosimo AI Networking Suite is a significant step forward in building and scaling generative AI applications. Customers use Amazon Bedrock APIs to create cutting-edge generative AI solutions with their own unique data sets.

Example Bedrock Use case:

One example from a recent customer conversation is using Bedrock for insurance claims, where agents run simple natural-language queries during the claims process. In this use case, the agent web frontend or AI chatbot may be hosted in a different cloud or data center, so the customer was looking for a way to create a secure, private channel between Azure and the Amazon Bedrock APIs that avoids exposure to threats on the public Internet.

Challenges and CSP Limitations to Meet Agile Networking Demands for AI

Amazon Bedrock APIs can be accessed in several ways:

- A quick yet less secure method is the utilization of NAT Gateways, which, while providing broad internet access, does not allow for destination-specific restrictions and incurs costs based on time and data volume.

- AWS PrivateLink is a more secure alternative to NAT Gateways, offering a dedicated path to the Amazon Bedrock API endpoint. Despite its advantages, AWS PrivateLink comes with its own challenges:

- PrivateLink is a regional construct. Extending it to other AWS regions requires additional configuration, such as VPC peering or transit gateways, plus PrivateLink endpoints in each region, which adds to the complexity of setup and ongoing management.

- Inherent costs: interface endpoints are billed for every hour they are provisioned (per Availability Zone) and per GB of data processed, in every region where they are deployed.

- Security group management is intricate and can be burdensome.
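To make the per-region overhead concrete, the sketch below enumerates the interface endpoints needed if every consuming VPC gets its own Bedrock endpoint. The service name pattern `com.amazonaws.<region>.bedrock-runtime` and the private DNS name `bedrock-runtime.<region>.amazonaws.com` follow AWS naming for the Bedrock runtime endpoint; the VPC IDs and the one-endpoint-per-VPC design are illustrative assumptions (centralizing endpoints behind a TGW is the alternative the text mentions).

```python
def bedrock_endpoint_plan(regions, vpcs_by_region):
    """List the interface endpoints needed when each consuming VPC gets its own endpoint."""
    plan = []
    for region in regions:
        # Interface endpoint service names follow com.amazonaws.<region>.<service>;
        # "bedrock-runtime" is the data-plane service used for model invocation.
        service_name = f"com.amazonaws.{region}.bedrock-runtime"
        private_dns = f"bedrock-runtime.{region}.amazonaws.com"
        for vpc_id in vpcs_by_region.get(region, []):
            plan.append({
                "region": region,
                "vpc_id": vpc_id,
                "service_name": service_name,
                "private_dns": private_dns,
            })
    return plan

# Hypothetical VPC IDs.
vpcs = {"us-east-1": ["vpc-app-a", "vpc-app-b"], "us-west-2": ["vpc-app-c"]}
plan = bedrock_endpoint_plan(["us-east-1", "us-west-2"], vpcs)
print(len(plan))                # 3
print(plan[2]["service_name"])  # com.amazonaws.us-west-2.bedrock-runtime
```

Each entry in the plan is a separately billed and separately secured endpoint, which is the management and cost burden the bullets above describe.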

Because traffic to Bedrock may originate from disparate VPCs, regions, on-premises environments, and accounts, operations teams need a simplified, unified view of traffic flows for compliance and support. This becomes harder when data sources extend beyond the AWS ecosystem. Serverless technologies add another layer: AWS Lambda paired with Amazon API Gateway offers substantial benefits in scalability, cost, and deployment simplicity and is well suited to applications with variable demand, but it also adds components that the network must reach and secure.

A Full Stack Platform that enables Networking for traditional and Gen AI - Solution Overview

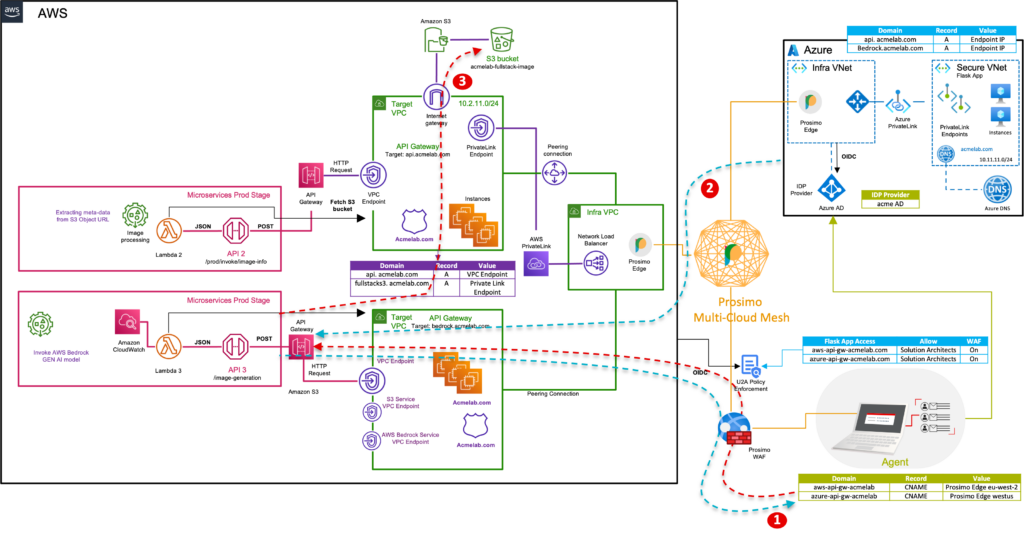

Prosimo AI Suite for Multi-Cloud Networking provides secure, dynamic connectivity across multi-cloud and data center environments through Prosimo Fullstack Transit, which builds a secure, highly scalable overlay mesh using cloud-native constructs and regional gateways. The Amazon Bedrock service is attached to the Prosimo Transit through a VPC endpoint; likewise, the API Gateway and the AWS Lambda functions that invoke the Bedrock API are provisioned through the complete self-service workflow. Prosimo extends this private, secure connectivity over the Internet or an enterprise backbone, so apps, web frontends, and networks in Azure or a data center can interact with Amazon Bedrock APIs without public IP addresses and without a complex tunneling architecture to extend PrivateLink between regions and clouds.

Next, we take a deeper dive into the architecture and walk through configuring Prosimo to provision connectivity for Bedrock using native networking constructs (TGW, VNet peering, VWAN hub, etc.), PrivateLink for secure access to Bedrock APIs via VPC endpoints, a Lambda function that invokes the Bedrock APIs through API Gateway, and Prosimo infrastructure components. This supports the secure development of generative AI applications that use proprietary data.

Bedrock Connectivity with Prosimo AI Networking Suite

The objective is to construct, orchestrate, and secure the connectivity between the source app in Azure and Amazon Bedrock API in an optimal and scalable fashion where Prosimo abstracts the underlying complexity and builds a very dynamic and agile fabric for workloads with a shorter lifetime, such as AWS Lambda functions invoked for LLM queries. Prosimo Edge gateways are provisioned with cache engines to locally cache common results to reduce latency and data transfer costs.
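The caching idea can be illustrated with a small time-to-live (TTL) cache keyed on the model and a normalized prompt. This is a generic sketch, not a description of how Prosimo's cache engine works; the normalization rule, the 300-second default TTL, and the injected `call` function are assumptions. Caching only makes sense for common, repeatable queries, since LLM output is generally not deterministic.

```python
import hashlib
import time

class ResponseCache:
    """Minimal TTL cache keyed on (model_id, normalized prompt). Illustrative only."""

    def __init__(self, ttl_seconds=300.0, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock          # injectable clock, handy for testing expiry
        self._store = {}            # key -> (stored_at, result)

    @staticmethod
    def key(model_id, prompt):
        # Case and whitespace differences should not defeat the cache.
        normalized = " ".join(prompt.lower().split())
        return hashlib.sha256(f"{model_id}\n{normalized}".encode()).hexdigest()

    def get_or_call(self, model_id, prompt, call):
        """Return (result, cache_hit); call the backend only on a miss or an expired entry."""
        k = self.key(model_id, prompt)
        now = self.clock()
        hit = self._store.get(k)
        if hit is not None and now - hit[0] < self.ttl:
            return hit[1], True
        result = call(model_id, prompt)
        self._store[k] = (now, result)
        return result, False

calls = []
def fake_bedrock(model_id, prompt):  # stand-in for a real model invocation
    calls.append(prompt)
    return f"answer to: {prompt}"

cache = ResponseCache(ttl_seconds=60)
print(cache.get_or_call("m", "What is a deductible?", fake_bedrock))
print(cache.get_or_call("m", "what is a  DEDUCTIBLE?", fake_bedrock))  # served from cache
print(len(calls))  # 1
```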

In the following diagram, we depict an architecture that uses Prosimo to provision the infrastructure for reading your proprietary data in Amazon DynamoDB (or RDS as PaaS) and augmenting the Amazon Bedrock API request, for example by generating images when your generative AI application answers image-generation queries. Prosimo's centralized AI firewall lets you apply guardrails from a rich AI policy engine to ensure compliance. Although we use DynamoDB for illustration, you can test private access to the Amazon Bedrock APIs end to end using the instructions in this post.

Pre-Requisites

- AWS and Azure cloud accounts have been successfully onboarded onto the Prosimo Platform, with the requisite privileges assigned.

- Prosimo Edge Gateways have been deployed in the regions corresponding to where the workloads are situated.

- Optionally, an Identity Provider (IDP) of choice has been onboarded for the Zero Trust Network Access (ZTNA) use case.

- Access to the foundational model has been requested through the Amazon Bedrock console.

Connectivity Workflow

Configure a source application to run the LLM query. For this document, we used a Flask app with an AI chatbot, hosted on both AWS and Azure and connected to the Prosimo Edge in the Azure and AWS source regions using Azure Private Link and AWS TGW. Prosimo uses the permissions granted during Day-0 onboarding to orchestrate and provision Private Link endpoints, NLBs, AWS Transit Gateway and TGW attachments, route programming, and the required security groups.

DynamoDB, a serverless database, is hosted in AWS in the target region and attached to Prosimo using Transit Gateway.

Prosimo Edge gateways discover each other and form an encrypted overlay mesh over the Internet or private transport. The Edge gateways use unique identifiers for discovery, and any new Edge that belongs to the same customer tenant is automatically added to the existing mesh. Likewise, during brownouts or regional disruptions, traffic is routed to the Edge closest to the source, providing full-mesh redundancy.
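The failover behavior can be sketched as choosing, among healthy Edges of the same tenant, the one with the lowest measured round-trip time from the source. This is a simplified illustration of "closest healthy edge" selection under assumed inputs (an `Edge` record and a latency map); it is not Prosimo's actual discovery or routing algorithm.

```python
from dataclasses import dataclass

@dataclass
class Edge:
    edge_id: str
    tenant_id: str
    region: str
    healthy: bool

def select_edge(edges, tenant_id, rtt_ms):
    """Pick the healthy edge of this tenant with the lowest RTT from the source.

    rtt_ms maps edge_id -> measured round-trip time in milliseconds.
    """
    candidates = [
        e for e in edges
        if e.tenant_id == tenant_id and e.healthy and e.edge_id in rtt_ms
    ]
    if not candidates:
        raise LookupError(f"no healthy edge available for tenant {tenant_id}")
    return min(candidates, key=lambda e: rtt_ms[e.edge_id])

# Hypothetical topology: the nearest edge is down, so the next closest one is chosen.
edges = [
    Edge("edge-eastus", "t1", "azure-eastus", healthy=False),  # regional disruption
    Edge("edge-use1", "t1", "aws-us-east-1", healthy=True),
    Edge("edge-usw2", "t1", "aws-us-west-2", healthy=True),
    Edge("edge-other", "t2", "aws-us-east-1", healthy=True),   # different tenant, never used
]
rtt = {"edge-eastus": 2.0, "edge-use1": 9.5, "edge-usw2": 64.0, "edge-other": 8.0}
print(select_edge(edges, "t1", rtt).edge_id)  # edge-use1
```

Filtering on the tenant ID first mirrors the idea that only Edges belonging to the same customer tenant join the same mesh.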

When establishing a new environment without pre-existing edges, the creation of the first Edge is triggered by the onboarding of the first application within a cloud region. Consequently, the user traffic begins to be directed through this Edge. As additional applications are integrated, the already operational Edges are leveraged, and connectivity from these Edges to the new applications is established.

API calls from the source Flask app in Azure are directed to Amazon API Gateway via Private Link, subject to VPC endpoint policies that grant access to the API Gateway.

Prosimo’s security policy framework will facilitate this by acting as a front for the Amazon API Gateway, which is integrated into the Prosimo fabric.
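As a concrete illustration of restricting API Gateway to traffic that arrives through PrivateLink, the sketch below builds a resource policy for a private API that denies `execute-api:Invoke` unless the request comes through an allowed VPC endpoint (the `aws:SourceVpce` condition key). This is one complementary control alongside the VPC endpoint policies mentioned above, not a description of how Prosimo configures them; the endpoint ID is a placeholder.

```python
import json

def private_api_policy(vpce_ids):
    """API Gateway resource policy allowing invocation only through the given VPC endpoints."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Deny any invocation that did not arrive through one of the listed endpoints.
                "Effect": "Deny",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": "execute-api:/*",
                "Condition": {"StringNotEquals": {"aws:SourceVpce": list(vpce_ids)}},
            },
            {
                # Everything not denied above is allowed.
                "Effect": "Allow",
                "Principal": "*",
                "Action": "execute-api:Invoke",
                "Resource": "execute-api:/*",
            },
        ],
    }

# Placeholder endpoint ID.
print(json.dumps(private_api_policy(["vpce-0123456789abcdef0"]), indent=2))
```

With `StringNotEquals` and multiple values, the deny applies only when the source endpoint matches none of them, so adding a region's endpoint to the list is enough to admit its traffic.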

AWS Lambda functions invoke the Amazon Bedrock API, and these calls are routed to the Amazon Bedrock VPC endpoint. The Amazon Bedrock service API endpoint receives the requests via PrivateLink, bypassing the public internet.

Finally, Amazon Bedrock responds to the Lambda function, which processes the data and stores the results in the designated Amazon S3 bucket, utilizing AWS PrivateLink for secure data transfer to Amazon S3.
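The Lambda step can be sketched with boto3's `bedrock-runtime` client (`invoke_model`) and S3 client (`put_object`). Several details are assumptions for illustration: the Titan Text request/response format, the `MODEL_ID` and `RESULTS_BUCKET` environment variables and their defaults, and an API Gateway proxy event whose body is `{"prompt": "..."}`. The clients are injectable so the logic can be exercised without AWS access.

```python
import json
import os
import uuid

# Assumed configuration; both names and defaults are hypothetical.
MODEL_ID = os.environ.get("MODEL_ID", "amazon.titan-text-express-v1")
RESULTS_BUCKET = os.environ.get("RESULTS_BUCKET", "example-genai-results")

def make_handler(bedrock=None, s3=None):
    """Build a Lambda handler; pass fake clients to test offline."""
    if bedrock is None or s3 is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        # If private DNS is enabled on the bedrock-runtime interface endpoint, the default
        # hostname resolves to the endpoint's private IPs inside the VPC.
        bedrock = bedrock or boto3.client("bedrock-runtime")
        s3 = s3 or boto3.client("s3")

    def handler(event, context):
        prompt = json.loads(event.get("body") or "{}").get("prompt", "")
        if not prompt:
            return {"statusCode": 400, "body": json.dumps({"error": "missing prompt"})}
        response = bedrock.invoke_model(
            modelId=MODEL_ID,
            contentType="application/json",
            accept="application/json",
            # Titan Text request format (assumed model family).
            body=json.dumps({"inputText": prompt, "textGenerationConfig": {"maxTokenCount": 512}}),
        )
        output = json.loads(response["body"].read())["results"][0]["outputText"]
        key = f"results/{uuid.uuid4()}.json"
        # Persist prompt and answer to the results bucket.
        s3.put_object(Bucket=RESULTS_BUCKET, Key=key,
                      Body=json.dumps({"prompt": prompt, "output": output}))
        return {"statusCode": 200, "body": json.dumps({"output": output, "s3_key": key})}

    return handler
```

In the deployed function, the module would expose something like `lambda_handler = make_handler()` as the configured handler, so the real clients are created once per execution environment.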

This architecture, while intricate, provides a robust and secure method of leveraging Amazon services, ensuring that both the connectivity and the data remain secure and efficiently managed.

Live Demo: Multi-Cloud Networking with Prosimo for Amazon Bedrock

Connectivity and Security Considerations for AI Workloads

Service Layer Connectivity

- Identifiers are no longer IPs but FQDNs and tags

- Requires Proxy services for TLS termination, URL rewriting, DNS

- FQDN attributes and Tags to define policies

Directing traffic to Prosimo through DNS rerouting takes on heightened significance in the cloud, where conditions are inherently more dynamic and fluid than in traditional networking. As PaaS or AI apps are integrated using native networking constructs, Prosimo builds a service networking layer in which endpoints are identified by FQDNs and labels instead of just IP addresses. Zero Trust policies are likewise defined on PaaS or FQDN endpoints and HTTP methods, so they move with the workload; resources can move and scale dynamically without re-plumbing network paths or re-IP work.
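A minimal sketch of a default-deny policy keyed on FQDNs, HTTP methods, and tags rather than IP addresses follows. The `Rule` structure, the tag names, and the API hostname are hypothetical; the point is that none of the inputs change when the backing IP addresses do.

```python
from dataclasses import dataclass, field
from fnmatch import fnmatch

@dataclass
class Rule:
    fqdn_pattern: str                                   # destination FQDN, shell-style wildcards allowed
    methods: set                                        # allowed HTTP methods, upper-case
    required_tags: dict = field(default_factory=dict)   # labels the source must carry

def is_allowed(rules, fqdn, method, source_tags):
    """Default deny: allow only if a rule matches the FQDN, the HTTP method and the source tags."""
    for rule in rules:
        if (fnmatch(fqdn.lower(), rule.fqdn_pattern.lower())
                and method.upper() in rule.methods
                and all(source_tags.get(k) == v for k, v in rule.required_tags.items())):
            return True
    return False

# Hypothetical rule: the claims team may POST to any API Gateway endpoint in us-east-1.
rules = [Rule("*.execute-api.us-east-1.amazonaws.com", {"POST"}, {"team": "claims-ai"})]
api = "abc123.execute-api.us-east-1.amazonaws.com"
print(is_allowed(rules, api, "post", {"team": "claims-ai", "env": "dev"}))  # True
print(is_allowed(rules, api, "DELETE", {"team": "claims-ai"}))             # False
print(is_allowed(rules, api, "POST", {"team": "marketing"}))               # False
```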

Private Link/Service Connect for AI workloads endpoint connectivity

Integrating target application endpoints into the Prosimo fabric, particularly through Private Link, is especially valuable in cloud environments. It provides secure, direct access to applications and establishes private connections to source endpoints without conventional route advertisement. With its overlay mesh, Prosimo addresses the CSP-native gaps identified earlier and connects source apps and networks to target applications in different regions or clouds without IPSec or NAT Gateway requirements. Source apps and networks reach services through proxies injected into their VPC/VNet. These networks are not peered with target networks and remain isolated, except for the services connected through the Prosimo transit.

Another challenge that typically emerges with PaaS and AI workloads is ephemeral or dynamic IP addresses. CSPs usually place PaaS services such as DynamoDB, RDS, or Bedrock behind load balancers to maintain robust infrastructure and SLAs, which translates into constantly changing IP addresses. With serverless functions or Amazon RDS as targets, keeping firewall and routing policies updated with ephemeral PaaS IPs is nearly impossible. Prosimo Service Core is designed specifically to handle dynamic IP changes for AI services, Lambda functions, and similar targets by mapping services to link-local IP addresses, which makes routing and policy updates much simpler.
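The link-local idea can be illustrated by giving each service FQDN a stable address from a link-local pool, so routes and firewall rules reference that address while the provider's real IPs keep changing behind it. This is a generic sketch, not Prosimo Service Core's implementation; the `169.254.100.0/24` pool is an assumption chosen to stay clear of `169.254.169.254`, which cloud instance metadata services use.

```python
import ipaddress

class LinkLocalMapper:
    """Assign each service FQDN a stable address from a link-local pool (169.254.0.0/16)."""

    def __init__(self, pool="169.254.100.0/24"):
        self._free = ipaddress.ip_network(pool).hosts()  # generator of usable host addresses
        self._by_fqdn = {}

    def address_for(self, fqdn):
        fqdn = fqdn.lower().rstrip(".")  # treat "Name." and "name" as the same service
        if fqdn not in self._by_fqdn:
            try:
                self._by_fqdn[fqdn] = next(self._free)
            except StopIteration:
                raise RuntimeError("link-local pool exhausted") from None
        return self._by_fqdn[fqdn]

mapper = LinkLocalMapper()
print(mapper.address_for("bedrock-runtime.us-east-1.amazonaws.com"))   # 169.254.100.1
print(mapper.address_for("dynamodb.us-east-1.amazonaws.com"))          # 169.254.100.2
print(mapper.address_for("BEDROCK-RUNTIME.us-east-1.amazonaws.com."))  # 169.254.100.1 (unchanged)
```

Because the mapping is keyed on the FQDN, a change in the service's public or private IPs never touches the address that policies refer to; only the proxy's upstream resolution changes.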

AI Firewall and Guardrails

In traditional networking, firewalls have played a critical role in preventing network attacks. Firewalls started as per-application deployments and quickly moved toward a centralized approach, where traffic from multiple applications is inspected by the same firewall. Economies of scale make such a deployment feasible, and they also enable better firewall rule evaluation, because a firewall that sees much more traffic can detect patterns across different applications.

With the advent of Gen AI, these concepts need to be extended to the many diverse Gen AI applications across an enterprise, which may run in different regions and clouds. As described above, Prosimo provides a unified MCN fabric that carries the traffic for such applications securely. Moreover, the Prosimo architecture inherently allows custom AI firewall policies to be applied uniformly across all Gen AI apps.

One example of a Gen AI firewall is the open-source NeMo Guardrails; other similar products exist as paid services or in-house implementations. An enterprise could deploy a centralized AI firewall in every region and then use Prosimo to pass all Gen AI traffic through these firewalls. Prosimo supports shared service insertion, which steers traffic for specific destinations or applications through a shared service such as an AI firewall. This lets SecOps control requests and responses for Gen AI applications, so all enterprise Gen AI traffic receives uniform, robust guardrail enforcement.
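Shared service insertion can be pictured as a path decision: flows to Gen AI destinations get the regional AI firewall inserted as an extra hop, while other traffic goes direct. The firewall hostnames, the region keys, and the destination patterns below are hypothetical assumptions (the Vertex AI pattern reflects its regional `<region>-aiplatform.googleapis.com` endpoints). The sketch fails closed when no firewall exists in the source region, which is one possible design choice.

```python
from fnmatch import fnmatch

# Hypothetical per-region AI firewall (guardrail) services.
AI_FIREWALLS = {"us-east-1": "aifw-use1.internal", "westeurope": "aifw-weu.internal"}
# Destinations whose traffic must be inspected by the AI firewall.
GENAI_DESTINATIONS = ["bedrock-runtime.*.amazonaws.com", "*-aiplatform.googleapis.com"]

def next_hops(source_region, destination_fqdn):
    """Return the ordered hops for a flow, inserting the regional AI firewall for Gen AI traffic."""
    if any(fnmatch(destination_fqdn, pattern) for pattern in GENAI_DESTINATIONS):
        firewall = AI_FIREWALLS.get(source_region)
        if firewall is None:
            # Fail closed: never send Gen AI traffic around the guardrails.
            raise LookupError(f"no AI firewall deployed in {source_region}")
        return [firewall, destination_fqdn]
    return [destination_fqdn]

print(next_hops("us-east-1", "bedrock-runtime.us-east-1.amazonaws.com"))
# ['aifw-use1.internal', 'bedrock-runtime.us-east-1.amazonaws.com']
print(next_hops("us-east-1", "dynamodb.us-east-1.amazonaws.com"))
# ['dynamodb.us-east-1.amazonaws.com']
```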

Unlocking the Full Potential of AI with Prosimo AI Suite for Multi-Cloud Networking

Prosimo’s AI Suite for Multi-Cloud Networking for Gen AI and traditional AI applications streamlines the deployment and management of AI infrastructure, offering scalability, security, and operational efficiency to meet the demands of modern enterprise AI initiatives. Pre-defined application connectivity templates and the AI Firewall let cloud networking teams, developers, and data scientists experiment with Gen AI with complete autonomy, by automating and abstracting the integration and management of cloud infrastructure, including networking constructs, on a highly scalable, dynamic fabric with guardrails.

Enterprise platform teams gain access to a networking suite tailored for Gen AI applications, offering several advantages:

- Self-Service Experience: Prosimo offers self-service APIs that allow developers and data scientists to independently deploy, view, and manage their GenAI applications and infrastructure. This autonomy comes from decoupling the underlying networking workflows from application workflows, enabling efficient resource management and application deployment across multiple cloud environments.

- AI/ML Ecosystem Support: Prosimo provides comprehensive support for leading LLM providers, including Amazon Bedrock, Vertex AI and others. This ensures that developers have access to the necessary tools and platforms to build and scale their GenAI applications effectively.

- Cloud-native Orchestration: With Prosimo, enterprises benefit from pre-built connectivity and policy templates compatible with public clouds. This flexibility ensures seamless orchestration and deployment of AI applications, regardless of the cloud environment.

- RBAC and Compliance: Prosimo enforces robust Role-Based Access Control (RBAC) mechanisms, allowing individual teams, Business units, developers, data scientists, and researchers to manage their environments securely. This isolation ensures that while users can manage their own projects, the integrity of platform team-designed templates is preserved. The AI firewall provides a centralized enforcement gatekeeper with a rich policy ruleset to enforce compliance for allowed data sets.

- Chargeback & Showback: To ensure transparent and accountable resource usage, Prosimo provides detailed financial metrics for each project. This includes both chargeback and showback capabilities, allowing for precise tracking of AI application costs across different cloud platforms.

- AI Stack Observability: Prosimo implements anomaly detection throughout the AI stack, including application-level visibility into cost, traffic, and resource attribution, providing a comprehensive overview of and enhanced control over the AI infrastructure.
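The chargeback and showback idea reduces to attributing each cost record to a project and cloud. The sketch below sums costs by a project tag and reports untagged spend separately so it can be chased down; the record schema and the `project` tag key are hypothetical assumptions rather than Prosimo's data model.

```python
from collections import defaultdict

def chargeback(cost_records, tag_key="project"):
    """Sum cost per project tag and cloud; untagged spend is reported under 'untagged'."""
    report = defaultdict(lambda: defaultdict(float))
    for rec in cost_records:
        project = rec.get("tags", {}).get(tag_key, "untagged")
        report[project][rec["cloud"]] += rec["cost_usd"]
    # Convert nested defaultdicts to plain dicts for reporting.
    return {project: dict(by_cloud) for project, by_cloud in report.items()}

# Hypothetical cost records from two clouds.
records = [
    {"cloud": "aws", "service": "bedrock", "cost_usd": 120.0, "tags": {"project": "claims-bot"}},
    {"cloud": "azure", "service": "app-service", "cost_usd": 45.5, "tags": {"project": "claims-bot"}},
    {"cloud": "aws", "service": "lambda", "cost_usd": 3.25, "tags": {}},
]
print(chargeback(records))
# {'claims-bot': {'aws': 120.0, 'azure': 45.5}, 'untagged': {'aws': 3.25}}
```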

For developers striving to streamline their AI workloads and enterprises aiming to elevate their AI initiatives with unparalleled efficiency, the Prosimo lab is a treasure trove of insights and practical experience. It’s not merely about understanding the mechanics; it’s about experiencing the real agility and freedom that Prosimo brings to your cloud endeavors.