As we introduced before, Nebula is the new Generative AI Chat. In this section, we dig deeper into the world of Generative AI.

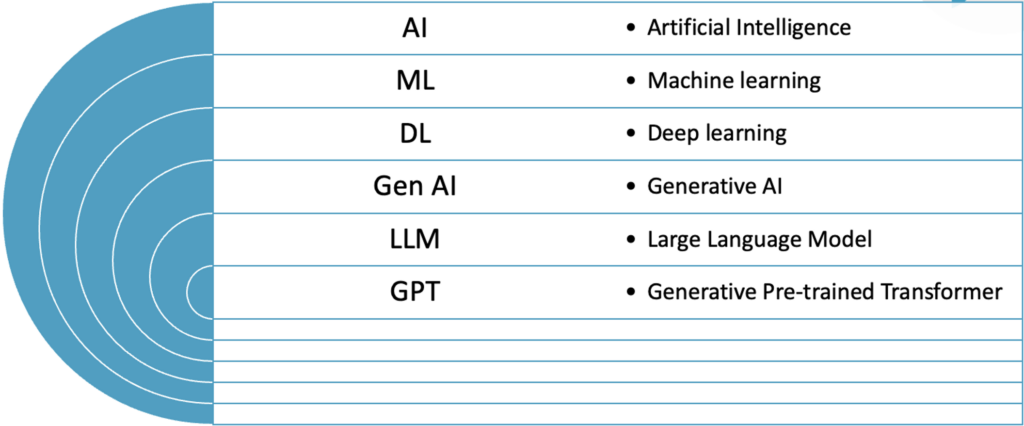

With the explosive growth of AI, a lot of terms and acronyms have been brought to the forefront – Generative AI, LLMs, NLP, ML, AI, DL. To begin with, let us give our take on how we think about these and set the context for the rest of the blog.

Note: There are other ways to look at this hierarchy in the literature, but we will stick to this one for our blog.

We will focus on Gen AI (Generative AI), which is a broad field. Besides LLMs (Large Language Models), which are considered part of Gen AI, the field also includes:

- GANs (Generative Adversarial Networks) may be used for a variety of purposes, including image generation or improving a base DL model

- Stable Diffusion – which also generates images

- OpenAI Jukebox or MuseNet – which generate music

Under the Gen AI umbrella, LLMs are the models trained to understand and generate text. This gives them different capabilities, such as:

- Summarizing text

- Translating text

- Understanding text

- Generating text

GPTs (Generative Pre-trained Transformers) are a particular type of LLM that uses the Transformer architecture. They are the basis of popular AI chatbots such as ChatGPT.

LLMs generate text by learning from text corpora and estimating the probability of the next word. For example, if you give a decently trained LLM the prompt “Mary had a little”, the LLM comes up with candidate words and their probabilities for the next word:

- “lamb” – Probability: High

- “girl” – Probability: Moderate

- “house” – Probability: Moderate

- “sister” – Probability: Moderate

- “pet” – Probability: Moderate

- “cat” – Probability: Moderate

- “dog” – Probability: Moderate

- “horse” – Probability: Low

- “chicken” – Probability: Low

- “duck” – Probability: Low
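To make the idea of "words and probabilities" concrete, here is a minimal sketch that estimates next-word probabilities by counting word pairs. The tiny corpus and the function name `next_word_probabilities` are made up for illustration. A real LLM uses a neural network that looks at the whole prompt rather than only the last word, so treat this as a toy stand-in and not as how any production model works.

```python
from collections import Counter

# Hypothetical toy corpus; a real LLM learns from vastly more text.
corpus = [
    "mary had a little lamb",
    "mary had a little lamb",
    "mary had a little lamb",
    "mary had a little dog",
    "john had a little cat",
]


def next_word_probabilities(prompt, corpus):
    """Estimate P(next word | last word of the prompt) from raw pair counts."""
    last = prompt.lower().split()[-1]
    counts = Counter()
    for sentence in corpus:
        words = sentence.split()
        # Walk over adjacent word pairs and count what follows `last`.
        for current, following in zip(words, words[1:]):
            if current == last:
                counts[following] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: n / total for word, n in counts.items()}


probs = next_word_probabilities("Mary had a little", corpus)
for word, p in sorted(probs.items(), key=lambda kv: -kv[1]):
    print(f"{word}: {p:.2f}")
```

With this corpus, “lamb” ends up with the highest probability simply because it follows “little” most often, which mirrors the High/Moderate/Low ranking above.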

The chosen word is then appended to the prompt and fed back in to generate the next word, and the next – which may eventually return the entire poem. [1]

Mary had a little lamb,

Its fleece was white as snow;

And everywhere that Mary went,

The lamb was sure to go. …
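The feed-it-back loop can be sketched in a few lines. This is a toy illustration under our own assumptions: the word-pair table, the training text, and the names `build_bigram_table` and `generate` are hypothetical, and the loop always picks the single most likely word (greedy decoding), whereas real chatbots typically sample from the probabilities and condition on the entire preceding text.

```python
from collections import Counter, defaultdict

# Hypothetical training text for the toy model.
text = "mary had a little lamb its fleece was white as snow"


def build_bigram_table(text):
    """Map each word to a Counter of the words seen right after it."""
    table = defaultdict(Counter)
    words = text.split()
    for current, following in zip(words, words[1:]):
        table[current][following] += 1
    return table


def generate(prompt, table, max_new_words=10):
    """Repeatedly append the most likely next word and feed the result back."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = table.get(words[-1])
        if not candidates:
            break  # the toy model has never seen anything after this word
        next_word = candidates.most_common(1)[0][0]
        words.append(next_word)
    return " ".join(words)


table = build_bigram_table(text)
result = generate("Mary had a little", table)
print(result)
```

Each pass through the loop uses the text generated so far as the new input, which is the same feedback idea described above, just at a much smaller scale.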

Why Nebula?

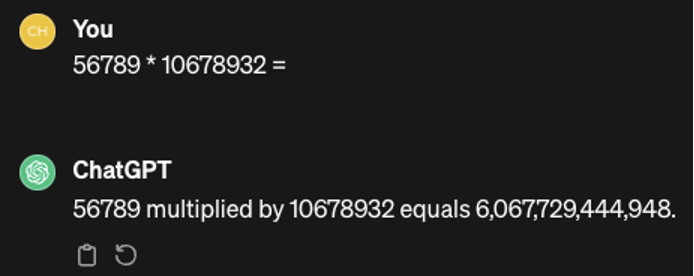

However, pure LLMs are not as good at other tasks such as math, since they try to predict the next words rather than do the math. For example [2] –

However, the correct answer is 606445869348. Close, but no cigar. This is a direct result of how LLMs are trained and how they work: since they deal only with language, they can’t work as calculators out of the box. So, in this case, the user is expecting the LLM to perform an action that it was not built to carry out (a typical case of “this is a feature, not a bug”).
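For contrast, ordinary code gets arithmetic exactly right every time, which is why one common workaround is to hand the calculation to a deterministic evaluator instead of asking the model to predict the digits. The sketch below is only an illustration of that general idea and not a description of how Nebula is built. The function name `evaluate` and the operands are our own hypothetical choices, since the numbers from the example above are not reproduced here.

```python
import ast
import operator

# Only plain integer addition, subtraction, and multiplication are allowed.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
}


def evaluate(expression):
    """Evaluate a simple integer arithmetic expression exactly."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if isinstance(node, ast.Constant) and type(node.value) is int:
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        # Anything else (function calls, names, etc.) is rejected.
        raise ValueError("unsupported expression")

    return walk(ast.parse(expression, mode="eval"))


# Hypothetical operands, chosen only for illustration.
product = evaluate("123456 * 654321")
print(product)
```

Python integers have arbitrary precision, so the result is exact no matter how many digits are involved.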

So when we built Nebula, our Generative AI Chat, we needed the best of LLMs, but we also had to train it differently and architect it to handle cases that demand capabilities beyond LLMs.

[1], [2] – From ChatGPT 3.5 at the time of writing this blog