Preface

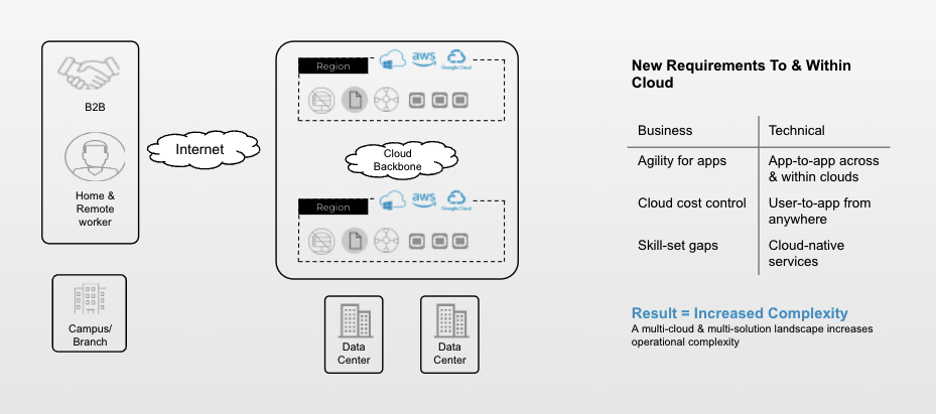

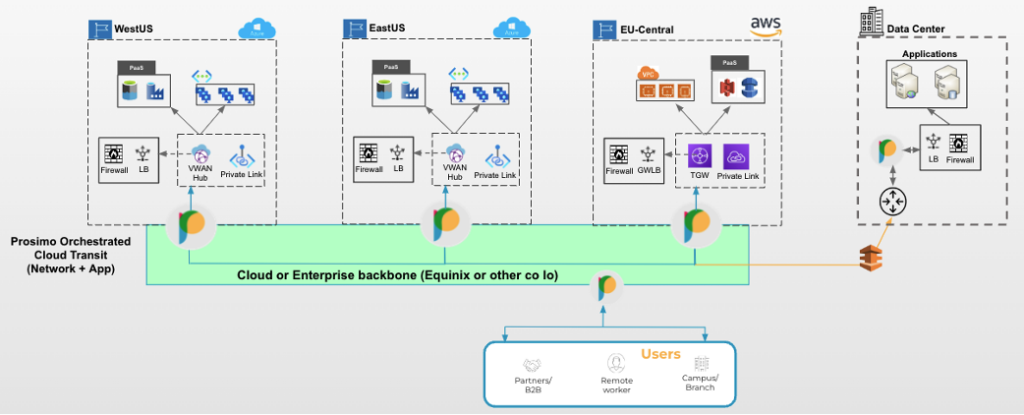

As the move to the cloud accelerates, a high-performance cloud network interconnecting applications and users is a key requirement. Enterprises and other organizations, including those in government and education, are building fully distributed application environments that can run in private, public, and hybrid clouds, including edge locations. The lack of a secure and agile cloud network solution is a barrier to this transformation. Legacy network architectures were designed for self-contained enterprise networks connecting to private clouds. While this approach has been repurposed for public cloud environments, it is not optimized for cloud-native networks and workloads.

To support the growing diversity of clouds and application infrastructure, connectivity itself must become table stakes; the conversation must move to performance, reliability, and built-in security. Enterprises need comprehensive visibility, monitoring, and proactive network operations to ensure a successful migration to a cloud-native paradigm.

Where is cloud networking today?

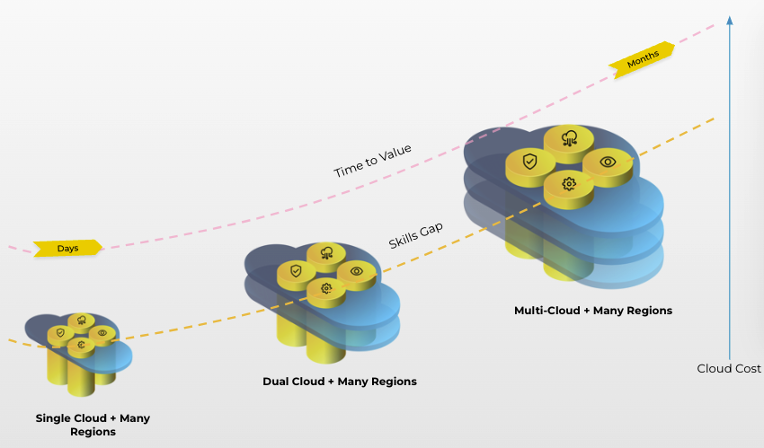

According to IDG’s 2021 cloud computing survey, 92% of organizations’ IT environments are at least somewhat in the cloud today, and more than half (55%) of organizations currently use multiple public clouds; 21% say they use three or more. Although architectures have evolved in other areas to support this transition to multicloud, the network has fallen behind. Deploying cloud networks within existing architectures is complex, inconsistent, and costly. Increasingly distributed applications across multiple clouds demand automated, secure, and high-performance multicloud networking.

Challenges

- Poor security and governance controls in cloud – problems magnify in multicloud.

- Lack of visibility and control with native cloud networking constructs alone.

- Tool sprawl as a result of multicloud expansion increases troubleshooting time and costs.

- A mix of next-gen and legacy applications without a reliable and consistent way to connect them.

The need for App-to-App Transit

Modern, cloud-native architecture

- In your administrative control

- Built using containerized services packaged as Kubernetes clusters in EKS

- Provide elastic capacity with auto-scaling capabilities based on load without spinning up instances manually

Simplified operations

- Prosimo is responsible for the life-cycle management of clusters including provisioning, upgrades, and patches

- Use cloud-native constructs (TGW, PrivateLink, etc.) for network peering

- No IPsec tunnels or throughput limitations between VPCs or data centers

- When integrated as the default gateway, no BGP advertisements are required for Layer 3 connectivity

- The Prosimo on-premises edge runs in a virtualized form factor; no dedicated hardware appliances are required

Network and port-level segmentation

- Prosimo can segment traffic based on ports and protocols in addition to subnets and VPCs in an AWS region

Cloud cost control

- Reduction of cloud costs as resources are only provisioned when needed

- No IPsec tunnel charges

Deep visibility and insights

- Detailed visibility helps minimize mean-time-to-resolution (MTTR)

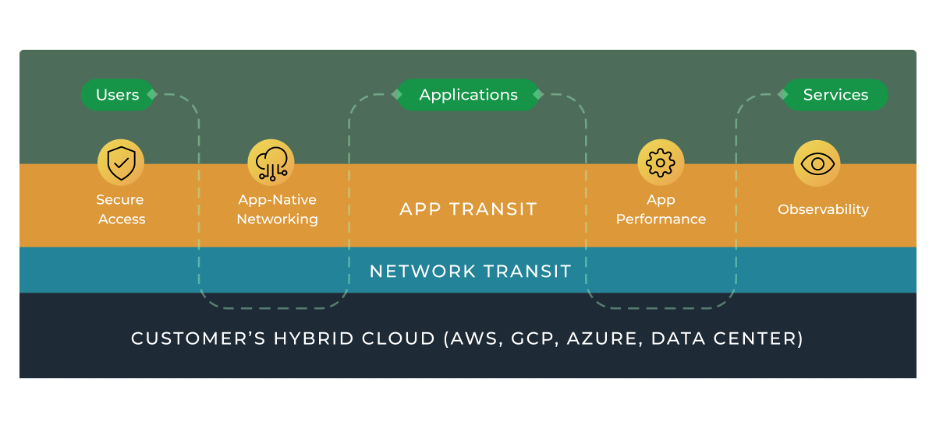

Why is a full-stack cloud transit needed?

IaaS, PaaS, and application endpoints need to communicate at various levels. IaaS apps running in a VPC or VNET need connectivity, security, and optimization at the network layer. PaaS or serverless services can only be attached at the application layer using an FQDN. The complexity of operating this mix grows by orders of magnitude as enterprises expand across clouds and regions.

To ensure the consistency and simplicity of their cloud environment, enterprises need to build an intelligent fabric that seamlessly interconnects these services and understands applications to ensure security and performance.

What can the full-stack cloud transit deliver for enterprises?

A consistent architecture reduces the complexity of connecting applications, services, and networks across clouds while addressing operations, security, and performance requirements. This architecture delivers the following outcomes:

Network Transit outcomes

- Multicloud made easy: connect public and private clouds with an elastic architecture that leverages cloud-native constructs

- A network as scalable as the cloud: scalable transport with cloud backbone, edge PoPs, and cloud-native gateways in regions and at the edge of cloud environments

App-to-App Transit outcomes

- Application performance: Prosimo’s full understanding of application types provides up to a 90% improvement in performance

- Secure access: Zero Trust access leveraging IDPs and a modern approach to secure access to public cloud workloads substantially reduces risk to the business

- Observability: Solve problems faster with visibility from L3 to L7 that helps diagnose security or performance issues and differentiate between network and app problems

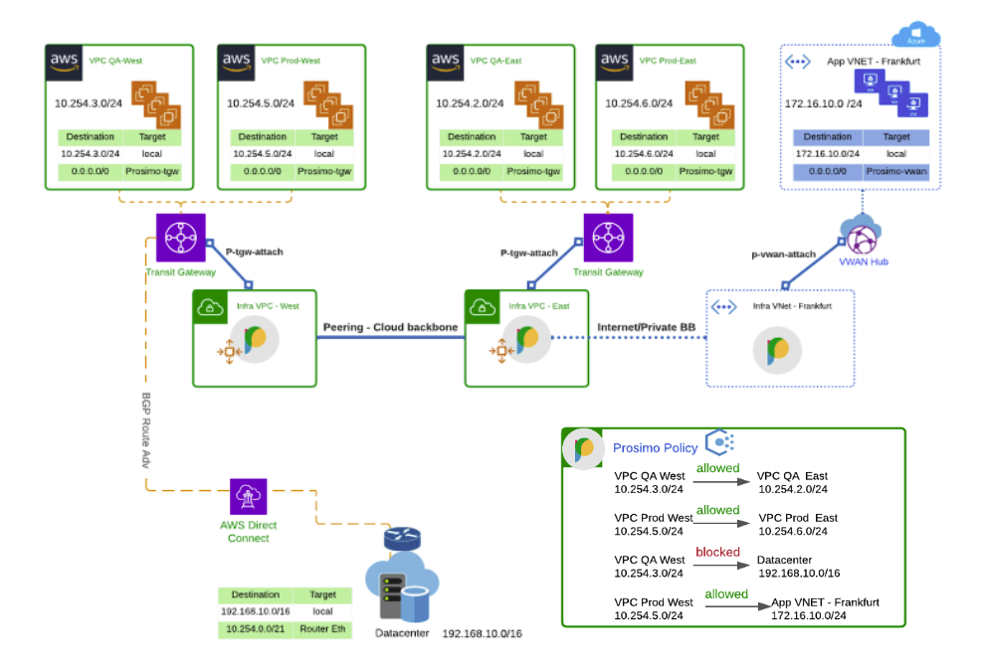

Network Transit

Prosimo’s network transit is built on an elastic Kubernetes architecture that works on the principles of cloud-native infrastructure. Whether you are using Transit Gateway in AWS or VNet peering in Azure, Prosimo orchestrates the connectivity between functions and clouds, selects the best possible path, and then provides you with a global view of your cloud networks and apps.

- Peering, connectivity, and orchestration at scale

- Easy insertion while co-existing with existing network constructs

- Simplified network transit – attach VPCs, VNets, or network segments then scale with consistent architecture

- Deep cloud and network visibility

- Day 0 through day n+1 automation

- Use the Prosimo policy enforcement engine for network layer segmentation between VPCs, VNets, and segments across cloud regions, multicloud, and data centers

Key Benefits when you build your network transit using Prosimo:

- Migrate away from a BGP mesh and from advertising routes between the data center and cloud VPCs by using the Prosimo on-prem edge footprint

- Fewer route table entries to manage with Prosimo on-prem edges used as default gateways to connect VPCs to VRFs

- Build a secure and optimized network transit across your multi-cloud and data center/branch environments

- Prosimo Connector securely connects Network CIDR traffic from VPCs, VNETs, data centers, and branch offices to the Prosimo fabric

Multicloud Networking at Layer 3

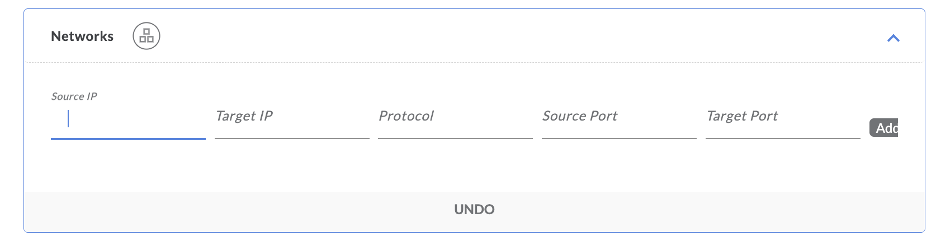

With IP layer multicloud networking, you can connect your application islands at Layer 3-4 through Prosimo cloud transit using IP subnet and port information. Zero Trust policies permit bi-directional communication only between authorized IP subnets and target port numbers, and they are enforced at every AXI edge. Additional details regarding this feature include:

- Application to application traffic is not exposed to the public internet and is accessible via Prosimo Cloud transit using private addressing (RFC 1918 IP addresses).

- Zero Trust boundary between authorized IP subnets and ports

- Traffic optimization and IP layer insights for application flows

- Secure transport using Prosimo secure tunnel across cloud regions and multicloud
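
The subnet-and-port boundary described above can be illustrated with a minimal sketch. It is not Prosimo's policy engine or API: the `Rule` class and `is_allowed` function are hypothetical names, the rule is evaluated statelessly, and "bi-directional" is modeled simply as either side being allowed to initiate a flow to the target port. The subnets are borrowed from the AWS-to-Azure example later in this document.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Rule:
    """Hypothetical allow rule: traffic between two subnets on one target port."""
    subnet_a: str
    subnet_b: str
    port: int


def is_allowed(rules, src_ip, dst_ip, dst_port):
    """Default deny: allow only flows that match a rule in either direction."""
    src, dst = ip_address(src_ip), ip_address(dst_ip)
    for rule in rules:
        a, b = ip_network(rule.subnet_a), ip_network(rule.subnet_b)
        forward = src in a and dst in b
        reverse = src in b and dst in a
        if (forward or reverse) and dst_port == rule.port:
            return True
    return False


# Illustrative rule: AWS app subnet <-> Azure app subnet on TCP 443 only.
rules = [Rule("10.30.40.0/24", "172.18.40.0/24", 443)]

print(is_allowed(rules, "10.30.40.20", "172.18.40.5", 443))
print(is_allowed(rules, "10.30.40.20", "172.18.40.5", 22))
```

Anything that does not match an explicit rule is rejected, which mirrors the Zero Trust posture of denying by default.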

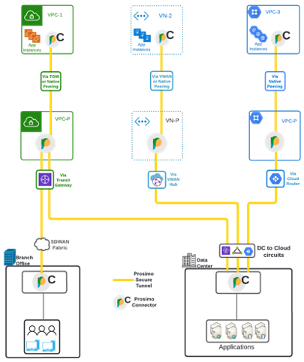

What does the connector do?

- A connector brings Layer 3 traffic to a Prosimo edge.

- It facilitates A2A (app-to-app) communication between Layer 3-4 applications and CIDRs within the Prosimo fabric.

- It is deployed within the VPC or VNET of an application configured as a “Source App” within the Prosimo fabric.

- It is available for deployment within AWS, Azure, GCP, and data centers.

- It helps optimize cloud connectivity by establishing a secure session with the most optimal edge within the Prosimo fabric.

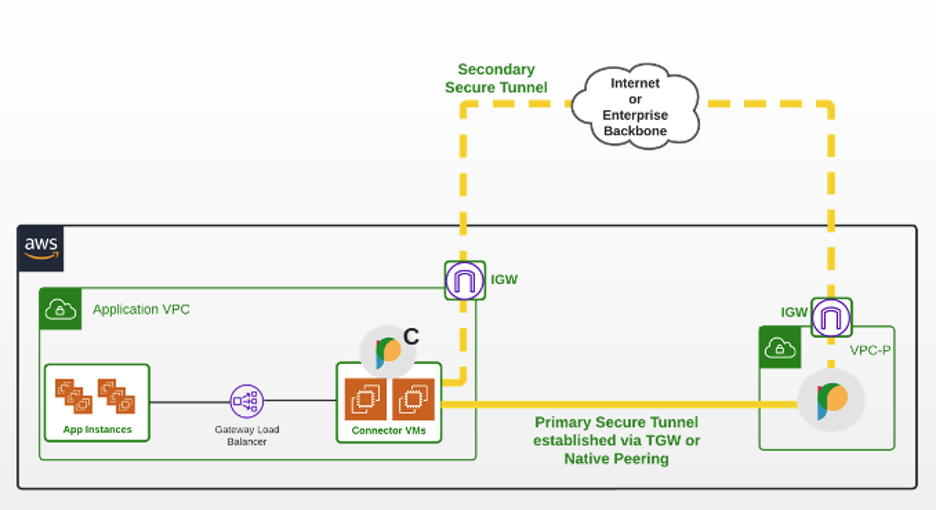

Example: Connector in intra-cloud (AWS)

Understanding the Prosimo Connector operation for intra-cloud:

- Prosimo orchestrates a set of virtual machines and a load balancer within application VPCs and VNETs.

- The connector VMs are configured as targets behind the load balancer.

- Routes are updated within the App VPC to attract traffic to the connector.

- The gateway load-balancer IP is the recipient of destination CIDR traffic.

- The gateway load-balancer forwards traffic to the connector VM targets using the GENEVE protocol, which is native to AWS Gateway Load Balancer.

- The connector forwards destination CIDR traffic to the edge gateway.

- The connector and the edge communicate via an encrypted tunnel.

- The connector inserts Prosimo-specific meta information into the traffic.

- Connectivity between the App VPC and the Edge VPC can be over public or private connections.

- The connector identifies that private connectivity is present and prefers the private connection by default.

- If the private network connectivity fails or becomes unavailable, the connector will automatically switch to the public option.
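
The private-first preference with public fallback described in this list can be sketched as a small selection function. This is an illustrative model only: `Path` and `select_path` are hypothetical names, the health flags stand in for whatever reachability checks the connector actually performs, and returning to the private path once it recovers is an assumption of this sketch rather than documented behavior.

```python
from enum import Enum


class Path(Enum):
    PRIVATE = "private"
    PUBLIC = "public"


def select_path(private_up: bool, public_up: bool):
    """Prefer the private connection; fall back to public; None if both are down."""
    if private_up:
        return Path.PRIVATE
    if public_up:
        return Path.PUBLIC
    return None


# Simulated health samples over time: (private_up, public_up).
samples = [(True, True), (False, True), (True, True)]
for private_up, public_up in samples:
    print(select_path(private_up, public_up))
```

Because the function is re-evaluated on every sample, the second sample switches to the public option and the third moves back to private.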

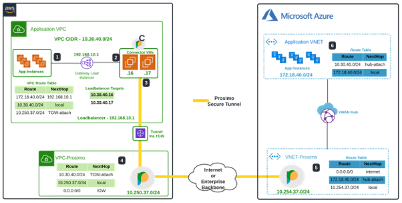

Example: Connector in inter-cloud (AWS to Azure)

Understanding the Prosimo Connector operation for inter-cloud:

- App instances in AWS (10.30.40.0/24) forward traffic for Azure App instances (172.18.40.0/24) to the load-balancer at IP 192.168.10.1.

- The load balancer forwards the traffic to one of the connector VMs (10.30.40.16 or 10.30.40.17).

- The connector sends the Azure app traffic through an established tunnel to the Prosimo edge gateway.

- The tunnel is established through a TGW.

- The Prosimo edge gateway in AWS (10.250.37.0/24) forwards Azure App Network traffic to the Prosimo edge gateway (10.254.37.0/24) in Azure using an established secure tunnel.

- The tunnel is established through the internet or private backbone.

- The Prosimo edge gateway in Azure forwards the traffic to the application network in Azure through the VWAN Hub attachment.

- App instances in Azure process the traffic received. Any responses follow the reverse path back to the App VPC in AWS.

Note: Connector VMs forward the traffic directly to the App instances in AWS once it’s received from the edge gateway in AWS.
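
The first hop of this flow depends on the App VPC route table steering Azure-bound traffic to the load balancer. The sketch below models that lookup as a generic longest-prefix match using the addresses from the example above. The `next_hop` function and the route-table dictionary are hypothetical illustrations, not the format Prosimo or AWS uses to store routes.

```python
from ipaddress import ip_address, ip_network


def next_hop(route_table, dst_ip):
    """Return the target of the most specific route that contains dst_ip."""
    dst = ip_address(dst_ip)
    matches = [
        (ip_network(cidr), target)
        for cidr, target in route_table.items()
        if dst in ip_network(cidr)
    ]
    if not matches:
        return None
    # Longest prefix wins, as in ordinary IP routing.
    return max(matches, key=lambda match: match[0].prefixlen)[1]


# App VPC routes in AWS, using the addresses from the example above.
app_vpc_routes = {
    "10.30.40.0/24": "local",
    "172.18.40.0/24": "load-balancer 192.168.10.1",
}

print(next_hop(app_vpc_routes, "172.18.40.7"))
print(next_hop(app_vpc_routes, "10.30.40.16"))
```

Traffic for the Azure app subnet resolves to the load balancer, while traffic inside the App VPC stays local.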

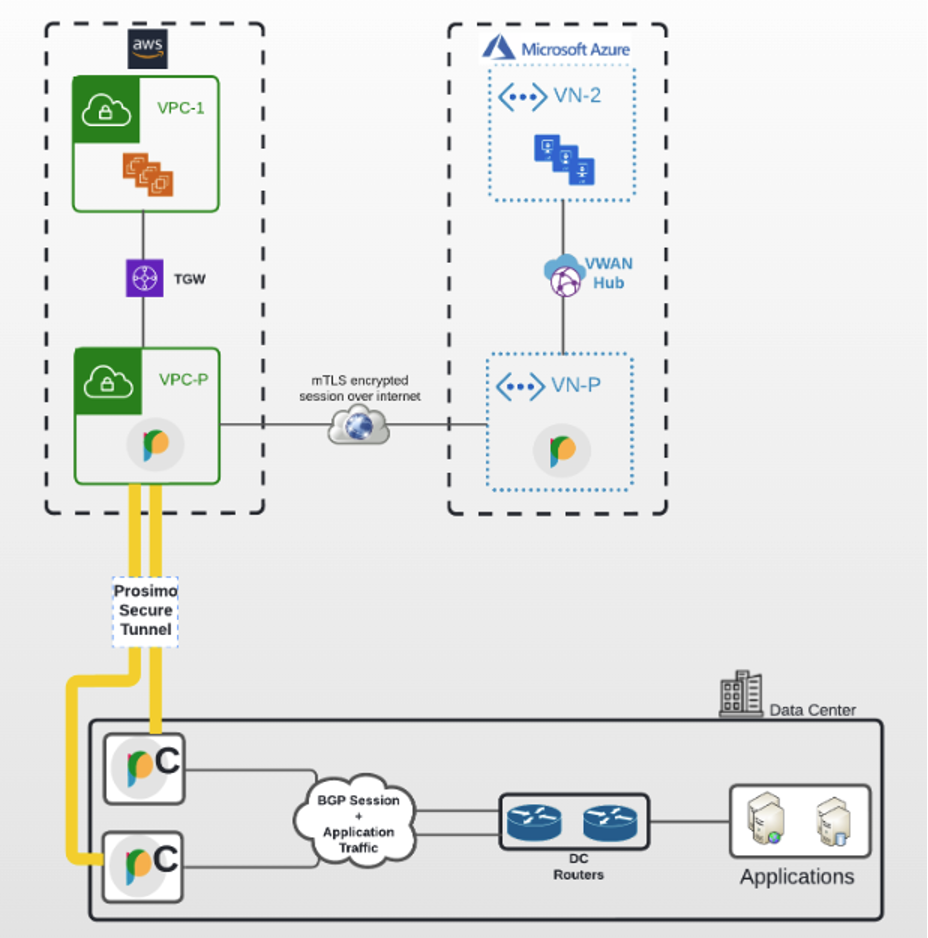

Example: Connector in hybrid cloud (private to multicloud)

Understanding the Prosimo Connector operation in hybrid cloud:

- The connector establishes BGP peering with the on-prem router.

- The connector advertises cloud app CIDRs with itself as next-hop.

- Each connector deployed requires a BGP session with the on-prem router.

- The connector establishes an outbound connection to Prosimo edge to forward traffic from DC.

- Outbound connections can use private (e.g., direct connect) or public connectivity (e.g., internet).

- The BGP session allows the connector to receive traffic for cloud apps from data center applications and forward it to the Prosimo edge gateway in the cloud.

- Prosimo edge gateway forwards traffic destined for data center applications to the connector.

- BGP is used only within the data center for LAN traffic; Prosimo orchestrates routing/connectivity within the cloud transit.

- Connectors may be deployed in data center and branch office environments that support VMDK, ISO, and QCOW2 images.
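
To make the advertisement model concrete, the sketch below shows what the on-prem router would learn when two connectors each hold their own BGP session and advertise the same cloud app CIDRs with themselves as next-hop. It is a plain data-structure illustration and does not speak BGP; `build_rib` is a hypothetical helper, and the connector addresses (10.10.0.11 and 10.10.0.12) are assumed values that do not appear in the original examples.

```python
from collections import defaultdict
from ipaddress import ip_address


def build_rib(sessions):
    """Merge per-connector advertisements into prefix -> sorted list of next hops."""
    rib = defaultdict(list)
    for connector_ip, prefixes in sessions.items():
        for prefix in prefixes:
            # Each connector advertises the prefix with itself as next-hop.
            rib[prefix].append(connector_ip)
    return {prefix: sorted(hops, key=ip_address) for prefix, hops in rib.items()}


# One BGP session per connector; both advertise the same cloud app CIDRs.
cloud_app_cidrs = ["10.30.40.0/24", "172.18.40.0/24"]
sessions = {
    "10.10.0.12": cloud_app_cidrs,
    "10.10.0.11": cloud_app_cidrs,
}

for prefix, hops in build_rib(sessions).items():
    print(prefix, "via", hops)
```

With more than one connector, the router sees several next hops per cloud prefix, which is why each deployed connector needs its own session.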

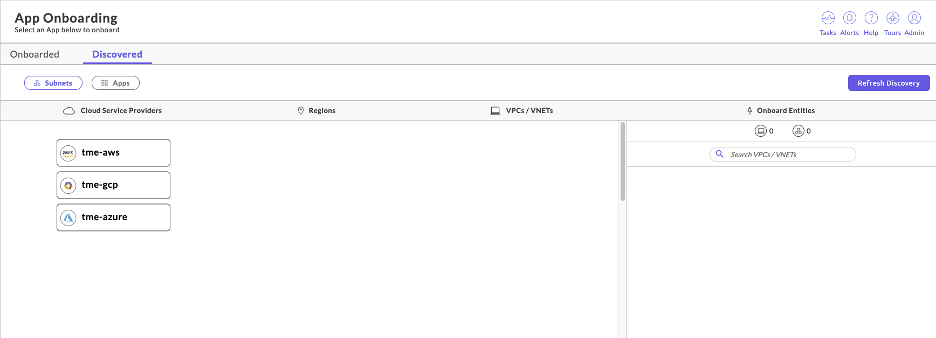

Automated onboarding

With cloud-led onboarding across globally distributed regions, different app teams can create new VPCs or VNETs, while cloud infrastructure teams use a single resource to track infrastructure and onboard it to the transit from any cloud and any region. Prosimo provides the following services to facilitate onboarding of cloud assets:

Cloud Asset Discovery – gives enterprises the ability to discover all cloud assets, enable single-click onboarding for cloud network assets, and create network transit for hybrid, inter-region, and cross-cloud with segmentation.

Cloud-Native Orchestration – simplifies orchestration across any cloud service provider environment, including AWS Cloud WAN, Azure Virtual WAN, and Private Link.

Understanding automated onboarding:

- You will need to onboard your public cloud credentials. (It is assumed that VPCs and subnets already exist within the cloud account.)

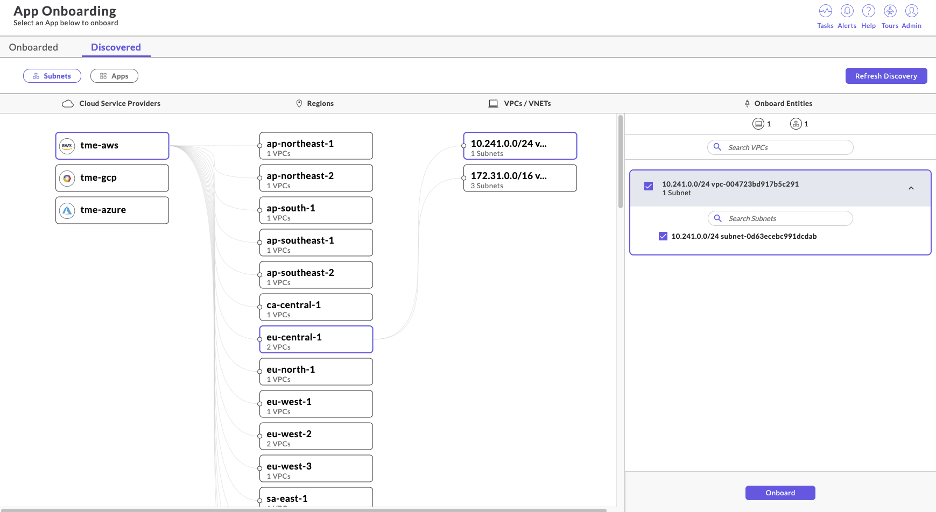

- Once you select the respective CSP account (as seen below), a list of regions and associated VPCs is displayed.

- You may now select the respective region where the subnet(s) and application endpoints reside.

- Once you click Onboard, a bidirectional application endpoint is added to the Prosimo fabric.

As seen in Figure 10, the Cloud Asset Discovery displays the available VPCs and VNETs as well as their respective subnets.

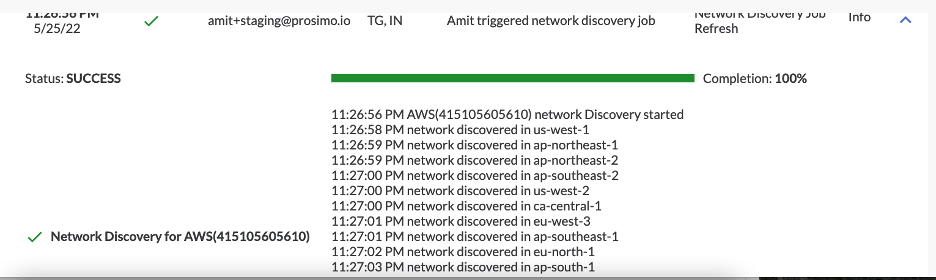

Refreshing the view will discover any changes to the cloud asset inventory as shown in Figure 11.

Network onboarding

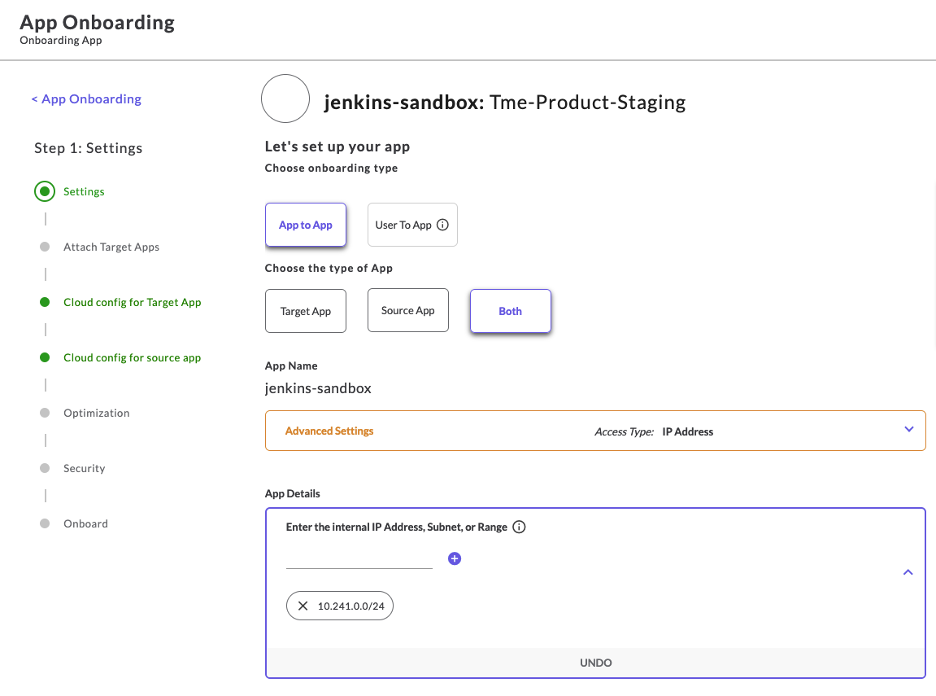

Once you complete the discovery of cloud assets, you can onboard endpoints via their subnet(s), individual IP addresses, or a range of IP addresses. Traffic flow is bi-directional by default; you may send traffic from the source application towards the target application and vice-versa.
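
The three endpoint forms (subnet, single IP address, IP range) can all be expressed as CIDR blocks, which is convenient when comparing them against VPC or VNET subnets. The sketch below uses Python's standard `ipaddress` module; the `to_cidrs` helper and the `first-last` range notation are assumptions made for illustration and are not taken from the Prosimo console or API.

```python
from ipaddress import ip_address, ip_network, summarize_address_range


def to_cidrs(endpoint):
    """Normalize a subnet, a single IP, or a 'first-last' range into CIDR strings."""
    if "-" in endpoint:
        first, last = (ip_address(part.strip()) for part in endpoint.split("-"))
        return [str(net) for net in summarize_address_range(first, last)]
    # A bare address becomes a /32; a subnet with host bits set raises ValueError.
    return [str(ip_network(endpoint))]


print(to_cidrs("10.30.40.0/24"))
print(to_cidrs("10.30.40.16"))
print(to_cidrs("10.30.40.16-10.30.40.20"))
```

A range that does not align to a single prefix is split into the smallest set of CIDR blocks that covers it exactly.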

Keeping in mind the previous AWS to Azure traffic flow:

- The VPC CIDR in AWS has been onboarded to the Prosimo fabric (through the TGW) as a source CIDR endpoint. Prosimo orchestrates a set of connector VMs and a load-balancer with associated endpoint configuration within this VPC.

- The VNET CIDR in Azure has been onboarded (through the VWAN hub) to the Prosimo fabric as a target CIDR for the source CIDR in AWS. No connectors are orchestrated within this VNET.

- Connector VMs establish a secure tunnel to the Prosimo edge gateway deployed in the same AWS region. Prosimo Edge gateways in AWS and Azure establish bidirectional, secure tunnels.

- Prosimo also modifies VPC/VNET routing tables to ensure the bidirectional flow of traffic through connectors and Edge gateways.

Note: Specific controls can be added via Policy under the network section to ensure only certain IP ranges and port numbers are made accessible.