Introduction

Since eBPF was first introduced to the Linux kernel in 2014, the technology has quickly evolved into one of the most versatile tools for application developers and system administrators to observe the kernel and modify network behavior. Its capabilities, such as kernel tracing and XDP, make it very attractive on high-performance network computing platforms. Integrating eBPF into our product has opened up possibilities that were not available before. In this blog post, we share our experience of using eBPF to solve real-world problems.

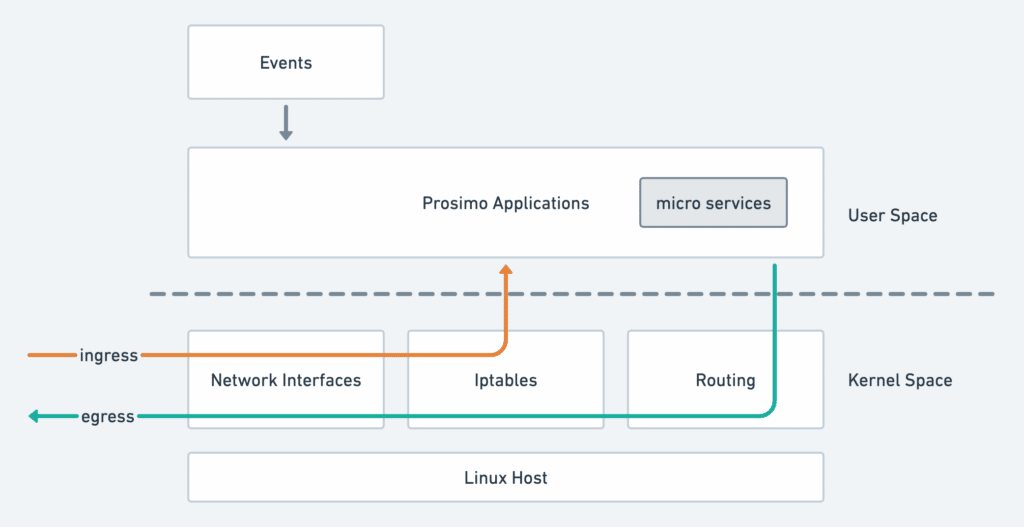

At Prosimo, we process Enterprise applications at scale. Broadly, our technology stack consists of users and applications and kernel functions on Linux computing clusters:

In this diagram, the ingress line indicates how we process incoming packets; the egress line indicates how an outgoing packet flows through the system. Note that this is an oversimplified view of the Prosimo system for illustrative purposes.

The user-space applications, generally speaking, respond to external events such as configuration or policy pushes and install the resulting changes into various parts of the system. They consist of an armada of microservices, each responding to the events that apply to it.

This system works well and allows Prosimo to quickly adapt to customer requirements by leveraging the microservices architecture. However, as our needs expand beyond the traditional building blocks of the Linux networking stack, this system becomes inadequate. For example, iptables is a common way to create network ACL rules; however, it is not easy to create iptables rules that match on custom conditions beyond the ones it supports.

That is where eBPF comes to the rescue.

eBPF, in a nutshell

eBPF, or extended Berkeley Packet Filter, is a Linux technology that allows custom programs to be loaded safely into the kernel at runtime. The safety guarantee is provided by the eBPF verifier, which checks every eBPF program before it is allowed to be installed in the running system.

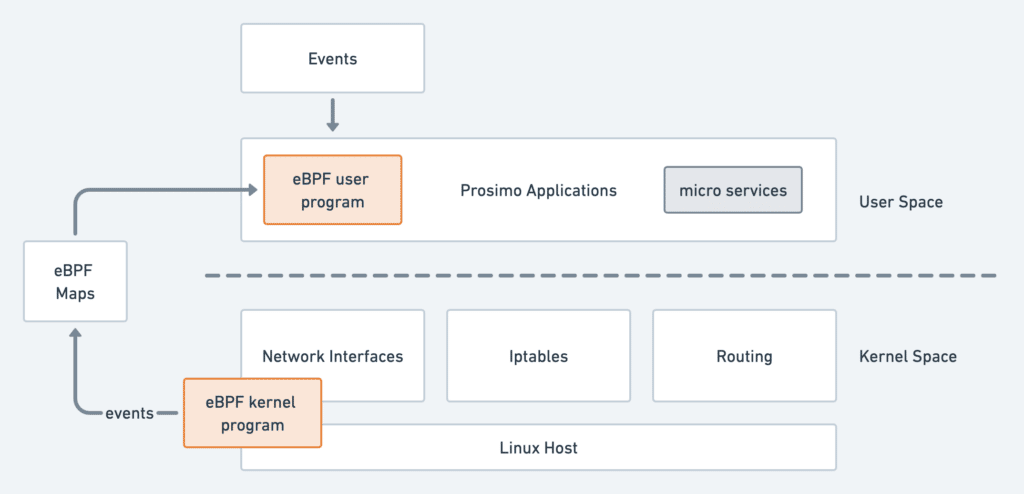

These eBPF programs can either be inserted into the networking subsystem to process network traffic or attached as probes to various kernel functions to observe how the system behaves. A shared memory space, or eBPF map, is used to exchange information between user space and kernel space. Therefore, an eBPF application is typically split into a user-space program and a kernel-space program.
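To make the user/kernel split concrete, here is a toy model written in plain Python rather than real eBPF. The class `BpfMapModel` and the functions `kernel_side` and `user_side` are hypothetical names invented for this sketch; they only imitate the idea of a fixed-capacity key/value map that the kernel-side program writes and the user-space program reads.

```python
from typing import Dict, Optional


class BpfMapModel:
    """Toy stand-in for an eBPF hash map: a fixed-capacity key/value store."""

    def __init__(self, max_entries: int) -> None:
        self.max_entries = max_entries
        self._data: Dict[int, int] = {}

    def lookup(self, key: int) -> Optional[int]:
        return self._data.get(key)

    def update(self, key: int, value: int) -> None:
        # New keys are refused once the map is full; existing keys can
        # always be overwritten.
        if key not in self._data and len(self._data) >= self.max_entries:
            raise OverflowError("map is full")
        self._data[key] = value

    def snapshot(self) -> Dict[int, int]:
        return dict(self._data)


def kernel_side(counters: BpfMapModel, ip_protocol: int) -> None:
    """Models the in-kernel program: runs once per packet and bumps a counter."""
    counters.update(ip_protocol, (counters.lookup(ip_protocol) or 0) + 1)


def user_side(counters: BpfMapModel) -> Dict[int, int]:
    """Models the user-space program: reads what the kernel side recorded."""
    return counters.snapshot()


counters = BpfMapModel(max_entries=16)
for proto in (6, 17, 6):          # two TCP packets and one UDP packet
    kernel_side(counters, proto)
print(user_side(counters))        # {6: 2, 17: 1}
```

In a real deployment the two halves are separate programs: the kernel half is compiled to eBPF bytecode and checked by the verifier, while the user half reads the map through the kernel's BPF interface.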

How Prosimo Uses eBPF Today

Prosimo uses the eBPF technology to extend the networking capabilities of Linux while maintaining seamless interoperability with the existing network functions. We also use eBPF to observe and debug the system.

We achieve both by inserting eBPF programs into various parts of the kernel. This essentially allows us to respond to kernel events from the bottom up, rather than relying only on the legacy top-down event push.

Because eBPF is complementary to the existing kernel functions rather than a replacement, we can add eBPF functionality gradually, where it is needed. The list of use cases is growing; here, we present a few that currently run at Prosimo.

Packet Decapsulation

Through the XDP framework, eBPF allows us to manipulate raw packet data before it enters the networking subsystem. XDP, or eXpress Data Path, runs eBPF programs on the raw packet data as soon as a packet is received. This makes XDP extremely fast and a clear choice when designing for scale.

UDP encapsulation, such as VXLAN or Geneve, is a common way to tunnel the original packet. In Prosimo, packets are sometimes UDP-encapsulated from one hop to another. This means that a packet must be decapsulated at every hop to restore its original form.

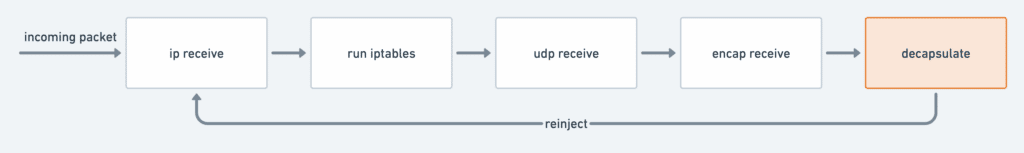

Without eBPF, this is the chain of kernel functions that a packet needs to go through to decapsulate a UDP-encapsulated packet:

Here, a packet goes through all the function chains only to find that the outer packet layer should be discarded. The outer layer is stripped off the encapsulation header and reinjected into the network stack. This means that every UDP-encapsulated packet goes through the network path twice.

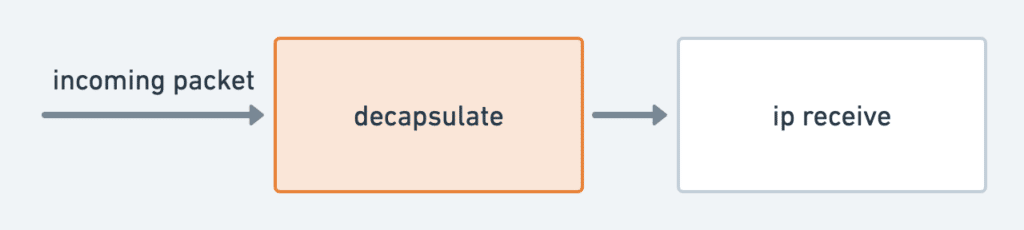

With eBPF/XDP-based approach, a packet is checked and stripped before the networking stack receives it. The networking stack continues to send the packet up the stack for further processing. Therefore, a UDP-encapsulated packet using eBPF/XDP incurs very little performance overhead:
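The check-and-strip step can be illustrated with a short user-space model. This is a sketch in Python, not an XDP program and not our production code: it assumes VXLAN over IPv4 with no VLAN tag, and the function names `decapsulate_vxlan` and `encapsulate_vxlan` are made up for the example. In a real XDP program the same header arithmetic would be done with bounds-checked pointer accesses, and the outer headers would be dropped with a helper such as `bpf_xdp_adjust_head`.

```python
import struct

ETH_HLEN = 14            # Ethernet header length (no VLAN tag assumed)
ETH_P_IP = 0x0800        # EtherType for IPv4
IPPROTO_UDP = 17
UDP_HLEN = 8
VXLAN_HLEN = 8
VXLAN_PORT = 4789        # IANA-assigned VXLAN UDP port
VXLAN_FLAG_VNI = 0x08    # "I" flag: the VNI field is valid


def decapsulate_vxlan(frame: bytes) -> bytes:
    """Return the inner Ethernet frame, or the frame unchanged if it is not VXLAN."""
    if len(frame) < ETH_HLEN + 20:
        return frame
    (ethertype,) = struct.unpack_from("!H", frame, 12)
    if ethertype != ETH_P_IP:
        return frame
    ver_ihl = frame[ETH_HLEN]
    ip_hlen = (ver_ihl & 0x0F) * 4          # IHL is counted in 32-bit words
    if ver_ihl >> 4 != 4 or ip_hlen < 20:
        return frame
    if frame[ETH_HLEN + 9] != IPPROTO_UDP:  # protocol field of the IPv4 header
        return frame
    udp_off = ETH_HLEN + ip_hlen
    vxlan_off = udp_off + UDP_HLEN
    inner_off = vxlan_off + VXLAN_HLEN
    if len(frame) < inner_off + ETH_HLEN:   # must hold at least an inner Ethernet header
        return frame
    (dst_port,) = struct.unpack_from("!H", frame, udp_off + 2)
    if dst_port != VXLAN_PORT or not frame[vxlan_off] & VXLAN_FLAG_VNI:
        return frame
    return frame[inner_off:]


def encapsulate_vxlan(inner: bytes, vni: int) -> bytes:
    """Build a minimal outer wrapper for demonstration (checksums left at zero)."""
    vxlan = struct.pack("!II", VXLAN_FLAG_VNI << 24, vni << 8)
    udp_len = UDP_HLEN + VXLAN_HLEN + len(inner)
    udp = struct.pack("!HHHH", 49152, VXLAN_PORT, udp_len, 0)
    ip = struct.pack("!BBHHHBBH4s4s", 0x45, 0, 20 + udp_len, 0, 0, 64,
                     IPPROTO_UDP, 0, bytes([10, 0, 0, 1]), bytes([10, 0, 0, 2]))
    eth = bytes(6) + bytes(6) + struct.pack("!H", ETH_P_IP)
    return eth + ip + udp + vxlan + inner


inner = bytes(12) + b"\x08\x00" + b"original payload"
restored = decapsulate_vxlan(encapsulate_vxlan(inner, vni=42))
# restored is byte-for-byte equal to inner
```

Frames that are not recognized as VXLAN are returned untouched, which mirrors the idea that non-tunnel traffic should continue into the regular networking stack unchanged.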

Service Chaining

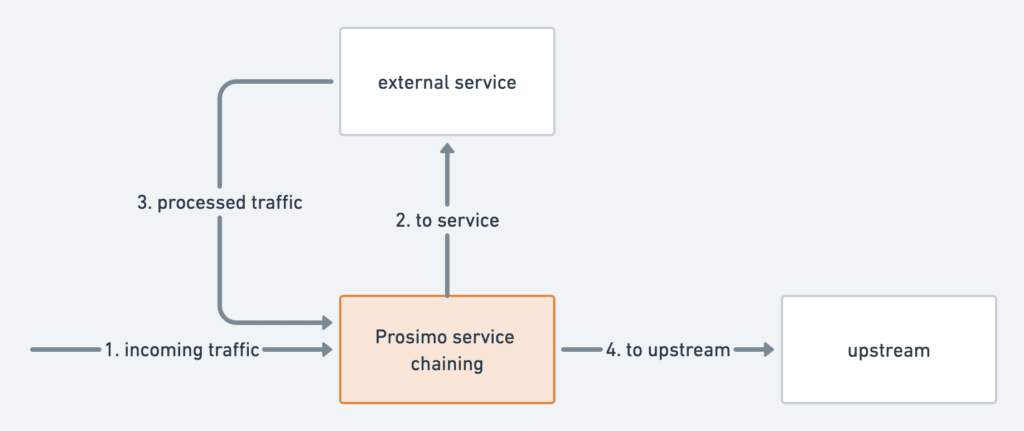

Service chaining in the cloud typically consists of forwarding unmarked traffic towards the service, while forwarding marked traffic towards the upstream:

For services such as firewalls, the packet must reach the service unmodified, and the service returns traffic to us unmodified. This means we must identify the incoming path based on something other than the packet header and make the routing decision accordingly.

We solve this problem by combining eBPF with the Linux kernel’s policy-based routing tables. In this case, the incoming packet is identified and marked inside the kernel with eBPF/XDP, and the routing decision is modified based on this packet marker. With this approach, the first pass of forwarding incoming traffic toward the external service is efficient, and the service chaining module coexists with the rest of the system.
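The mark-then-route idea can be sketched as follows. This is a simplified Python model, not the actual datapath: the interface name `svc0`, the mark value, and the names `Packet`, `mark_on_ingress`, and `select_route` are all hypothetical, and identifying the path by ingress interface is an assumption made for the example. On Linux, the routing half is analogous to an `ip rule` that selects a routing table by `fwmark`.

```python
from dataclasses import dataclass, replace

MARK_NONE = 0
MARK_FROM_SERVICE = 0x1             # hypothetical mark value
SERVICE_FACING_IFACES = {"svc0"}    # hypothetical interface facing the service


@dataclass(frozen=True)
class Packet:
    ingress_iface: str
    data: bytes            # packet bytes; never modified by the marking step
    mark: int = MARK_NONE  # out-of-band metadata, not part of the packet


def mark_on_ingress(pkt: Packet) -> Packet:
    """Identify the incoming path and record it as metadata only."""
    if pkt.ingress_iface in SERVICE_FACING_IFACES:
        return replace(pkt, mark=MARK_FROM_SERVICE)
    return pkt


def select_route(pkt: Packet) -> str:
    """Policy routing: marked traffic goes upstream, unmarked goes to the service."""
    return "upstream" if pkt.mark == MARK_FROM_SERVICE else "service"


first_pass = mark_on_ingress(Packet("eth0", b"client request"))
returned = mark_on_ingress(Packet("svc0", b"client request"))
print(select_route(first_pass))   # service
print(select_route(returned))     # upstream
```

Because the mark lives beside the packet rather than inside it, the bytes seen by the firewall and the bytes it returns are identical, which is the property the service requires.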

Although it is possible to implement service chaining using Linux’s iptables and routing infrastructure, we decided to go with the eBPF/XDP approach. Beyond the performance benefit, it allows the service chaining module to integrate seamlessly with other network components.

Network Policy

Another way we use eBPF is for security. In Prosimo, we provide a rich set of security policies, some of which can be executed early in the networking stack so that unwanted connections are rejected as soon as possible. Running policy as early as possible not only improves performance but is also good security practice.

We, therefore, implement the network ACL-based policy using eBPF. Every connection is checked against the set of network ACLs, and a denied connection is rejected before it reaches the network stack. These policy events are received by the user space application and sent upstream to our analytics pipeline. An accepted connection continues to flow through the network stack, and all the other network functionalities continue to run.
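A minimal model of this check is shown below. It is a Python sketch under stated assumptions, not the eBPF implementation: the rule format (`AclRule`), the first-match semantics, the default action of `accept`, and the choice to emit an event only for rejected connections are all assumptions for illustration. The `events` list stands in for whatever channel carries policy events from the kernel to the user-space application.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class AclRule:
    src: str                   # source network in CIDR notation
    dst_port: Optional[int]    # None matches any destination port
    action: str                # "accept" or "reject"


def check_connection(rules: List[AclRule], src_ip: str, dst_port: int,
                     events: List[Dict], default_action: str = "accept") -> str:
    """First matching rule wins; rejected connections produce a policy event."""
    addr = ipaddress.ip_address(src_ip)
    action = default_action
    for rule in rules:
        if addr in ipaddress.ip_network(rule.src) and rule.dst_port in (None, dst_port):
            action = rule.action
            break
    if action == "reject":
        # Stand-in for the event sent to user space and on to analytics.
        events.append({"src": src_ip, "dst_port": dst_port, "action": action})
    return action


rules = [
    AclRule("10.0.0.0/8", 22, "reject"),     # block SSH from this range
    AclRule("10.0.0.0/8", None, "accept"),   # everything else from it is fine
]
events: List[Dict] = []
print(check_connection(rules, "10.1.2.3", 22, events))    # reject
print(check_connection(rules, "10.1.2.3", 443, events))   # accept
print(len(events))                                        # 1
```

Only the rejected connection leaves a trace in `events`; the accepted one simply continues, mirroring how accepted traffic carries on through the rest of the network stack.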

The use of eBPF in the network policy is a gradual migration. The legacy network policy coexists with the eBPF-based network policy. This allows backward compatibility and a smooth migration path.

Debugging and Observability

eBPF has proved to be a handy tool for debugging and kernel observability. At Prosimo, we have leveraged eBPF to help us with various debugging scenarios and improve our system’s observability. We have packaged the eBPF tracing tool into the product and created additional trace programs specifically tailored to debug our system.

Using eBPF in debugging gives us the following advantages:

- Low performance overhead

Running eBPF tracing has very low performance overhead. Unlike full packet capture, tracing can typically run directly in the production network without a visible performance hit.

- Dynamic loading

A tracer program can be loaded dynamically and unloaded after completing the debugging session. The tracer program can be modified to fit the specific debugging needs without reloading images into the running system.

- Kernel visibility

An eBPF tracer installs probes into kernel functions. This gives eBPF unmatched visibility inside the kernel. For example, packet capture gives us visibility when the packet enters or leaves the system; running the eBPF tracer inside the kernel functions gives us insight into the packet as it passes through the network stack. We have often used this capability to debug and diagnose system problems.

Summary

At Prosimo, we have integrated eBPF into various parts of our technology stack. This allows us to build services that are not possible with the traditional Linux networking stack alone. With eBPF integrated into the Prosimo technology stack, we can quickly expand the set of use cases and bring in more features and functionality to build a better product.

References

- eBPF – https://ebpf.io/

- BPF tracing tool – https://github.com/iovisor/bpftrace

- Red Hat Developer blog – https://developers.redhat.com/blog

- LWN.net: A thorough introduction to eBPF – https://lwn.net/Articles/740157/

- Brendan Gregg’s eBPF observability blog – https://www.brendangregg.com/blog/